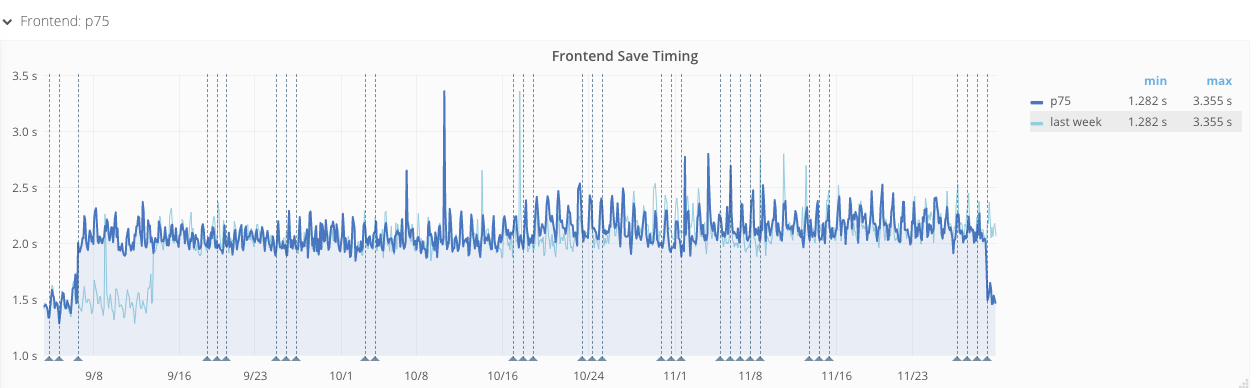

Frontend Save Timing

Series shown: Median (coal), Median (statsd), p75 (statsd)

Median:

- June 2018 and earlier: ~850ms.

- around 5 July 2018: Increased to ~900ms and remained elevated.

- around 8 August 2018: Increased again to ~950ms and remained elevated.

- around 21 August 2018: Increased again to ~1,000ms and remained elevated.

- around 5 September 2018: Increased again to ~1,400ms and is still elevated.

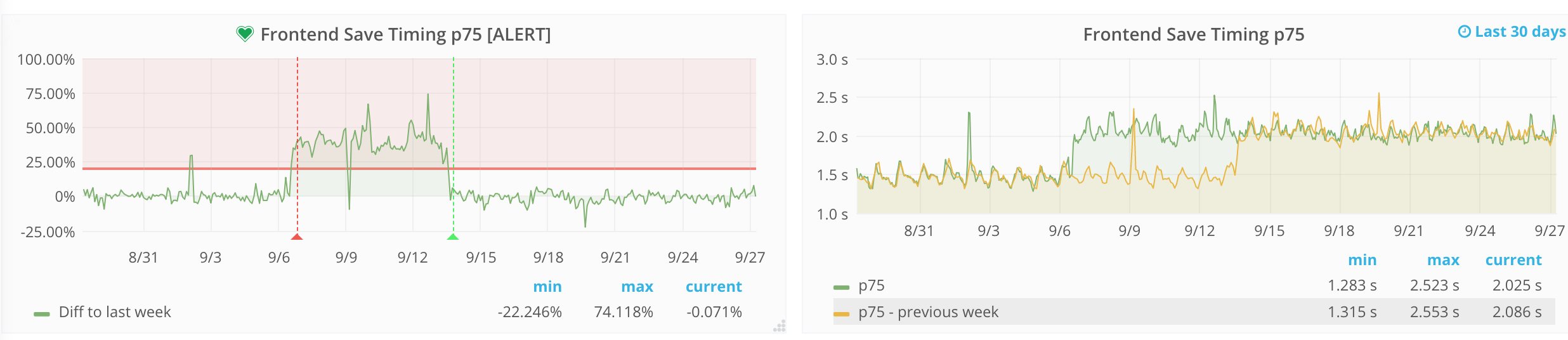

Backend Save Timing

p75:

- 27 June 2018 and earlier: ~530ms.

- around 5 July 2018: Increased to ~630ms.

- around 16 July 2018: Dropped to ~400ms for a few days (?).

- around 8 August 2018: Increased to ~650ms.

- around 5 September 2018: Increased over 3 days, up to ~850ms.
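To put the regressions in relative terms, the sketch below computes the step-by-step and cumulative percentage change for each series, using only the approximate values listed above. Because those values are rough readings off the graphs ("~" and "around"), the resulting percentages are approximate too. The brief ~400ms backend dip is included as reported, even though it is marked as uncertain. The names `FRONTEND_MEDIAN`, `BACKEND_P75`, `pct_change` and `summarize` are illustrative only.

```python
# Approximate values (ms) transcribed from the lists above; dates are approximate.
FRONTEND_MEDIAN = [
    ("June 2018 and earlier", 850),
    ("~5 July 2018", 900),
    ("~8 August 2018", 950),
    ("~21 August 2018", 1000),
    ("~5 September 2018", 1400),
]

BACKEND_P75 = [
    ("27 June 2018 and earlier", 530),
    ("~5 July 2018", 630),
    ("~16 July 2018", 400),  # brief dip, marked as uncertain in the report
    ("~8 August 2018", 650),
    ("~5 September 2018", 850),
]


def pct_change(old: float, new: float) -> float:
    """Relative change from old to new, in percent."""
    return (new - old) / old * 100.0


def summarize(series):
    """Return (label, value, step change %, change vs. first value %) per row."""
    baseline = series[0][1]
    prev = baseline
    rows = []
    for label, value in series:
        rows.append((label, value, pct_change(prev, value), pct_change(baseline, value)))
        prev = value
    return rows


if __name__ == "__main__":
    for name, series in (("Frontend median", FRONTEND_MEDIAN), ("Backend p75", BACKEND_P75)):
        print(name)
        for label, value, step, total in summarize(series):
            print(f"  {label:<26} {value:>5} ms  step {step:+6.1f}%  vs baseline {total:+6.1f}%")
```

With these inputs, the frontend median ends up roughly 65% above its June 2018 level (850ms to 1,400ms), and the backend p75 roughly 60% above its late-June level (530ms to 850ms).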