Background

One of, if not the, most expensive procedures during edit "stash" and edit "save" requests is the page rendering (Parser invocation). It has happened numerous times that an extension directly or indirectly causes the input to be parsed additional times, which significantly slows down the person's interaction with the site.

I believe there have been others, and for the record, the latest (T288639) was in Aug 2021.

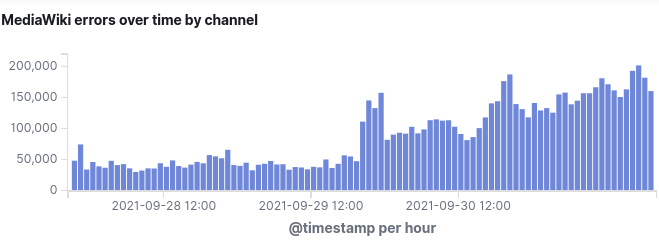

Since September 2021 we have a Logstash dashboard for the DuplicateParse logging bucket: https://logstash.wikimedia.org/app/dashboards#/view/11bb9170-1bd2-11ec-85b7-9d1831ce7631

Monitoring

I don't know if we can completely disallow this (e.g. throw an exception when it happens, and catch it early during testing/deployment), but that seems like an "ideal" to explore first.

Short of that, the next thing we can do is detect it at run-time and issue a warning against it (e.g. trigger_error, similar to what we do with Hard-deprecations and PHP's own deprecation warnings), plus a diagnostic message into a relevant logger channel with details about how and what is going on (e.g. stack of first parse, and whatever is different between them that led to a second parse).

This warning will ensure CI fails if it happens during any of the simulated edits from PHPUnit or Selenium integration tests, and ensures it is caught in a likely-train-blocking fashion when happening in production, yet without immediate user impact (no fatal exception), apart from the obvious regression in latency.