User story

As a product owner, I'd like pages to be bucketed randomly for A/B testing of rich snippets.

Background

Following T299215, we can repurpose the code from earlier work that added split testing to page schemas. That code lets us bucket pages into distinct test groups (i.e., control and treatment) for running A/B tests.

Once that random page split tester is in place, we can use a WikimediaEvents (WME) hook to apply the new treatment by overriding the header of a qualified page in the treatment group. The same hook can log an indicator of whether the new treatment was applied on every test page (control and treatment) for data analysis of the experiment.

Acceptance criteria

- The PageSplitTester class and the hooks it needs are added to WME.

- The max-snippet:400 directive (per T299133#7707177) is added to the robots meta tag for treatment pages.

- New key-value pair max-snippet is added to the X-Analytics header for both control and treatment pages.

- New key-value pair max-snippet is documented on the X-Analytics Wikitech page. (TK once the patch passes code review.)

Developer notes

The PageSplitTester class was introduced in T206868 (relevant patch) and removed in T209377 (relevant patch).

Config for the sameAs property A/B test was introduced in T208755 (relevant patch) and removed in T209377 (relevant patch). We can leverage similar config for the current rich snippets A/B instrument when it is ready to be deployed.

The first step is to move the PageSplitTester class (originally implemented in the Wikibase extension) into WikimediaEvents:

- Add a new instrumentation class with updated methods/properties of the PageSplitTester class to WME.

- Add isSchemaTreatmentActiveForPageId to WikimediaEventsHooks.php.

- Add the hook onOutputPageAfterGetHeadLinksArray to the new class. Inside it, check whether the current page is in the treatment group using isSchemaTreatmentActiveForPageId, then change the value of $tags['meta-robots'] if it exists, or add the tag if it is missing.

- Append max-snippet:400 to the content key of $tags['meta-robots'] for treatment pages only.

- For both treatment and control pages, inside the onOutputPageAfterGetHeadLinksArray hook, add a boolean value for a new max-snippet key in the X-Analytics header: true for treatment, false for control.
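The steps above can be sketched as follows. This is a minimal, self-contained illustration and not the WikimediaEvents code: the function names, the crc32-based sampling and bucketing, and the handling of the robots content as a plain comma-separated string are all assumptions made for the example. The real PageSplitTester logic and the shape of $tags['meta-robots'] may differ.

```php
<?php
// Hypothetical sketch: every name and the hashing scheme below are
// illustrative assumptions, not the PageSplitTester implementation.

/** Map a page ID deterministically to a float in [0, 1). */
function scalePageId( int $pageId ): float {
    // Mask to 31 bits so the value is non-negative on any platform.
    return ( ( crc32( (string)$pageId ) & 0x7FFFFFFF ) % 10000 ) / 10000;
}

/** A page is sampled only if it has a real page ID and falls under the ratio. */
function isSampled( ?int $pageId, float $samplingRatio ): bool {
    if ( $pageId === null || $samplingRatio <= 0 ) {
        return false;
    }
    return scalePageId( $pageId ) < $samplingRatio;
}

/** Split pages into two buckets using a salted hash, independent of sampling. */
function isTreatment( int $pageId ): bool {
    return ( crc32( 'bucket:' . $pageId ) & 1 ) === 1;
}

/** Append the max-snippet directive to an existing robots content string. */
function appendMaxSnippet( string $robotsContent, int $limit = 400 ): string {
    $directive = 'max-snippet:' . $limit;
    return $robotsContent === '' ? $directive : $robotsContent . ',' . $directive;
}

/**
 * Decide what the hook would do for one page.
 * Returns the robots content and the max-snippet flag (null when unsampled).
 */
function applySplitTest( ?int $pageId, float $samplingRatio, string $robotsContent ): array {
    if ( !isSampled( $pageId, $samplingRatio ) ) {
        return [ 'robots' => $robotsContent, 'maxSnippet' => null ];
    }
    $treatment = isTreatment( $pageId );
    return [
        'robots' => $treatment ? appendMaxSnippet( $robotsContent ) : $robotsContent,
        'maxSnippet' => $treatment,
    ];
}
```

With a sampling ratio of 1, every page that has a page ID is sampled, and the `maxSnippet` flag corresponds to the boolean that would be logged in the X-Analytics header. Pages with a null page ID, or any page when the ratio is unset or 0, are left untouched.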

QA steps on local

- To test the bucketing, sampling, and max-snippet directive locally, you'll need to install the XAnalytics extension and add the following config in local settings:

      wfLoadExtension( 'XAnalytics' );
      $wgWMEPageSchemaSplitTestSamplingRatio = 1;

- You will also need a few pages created locally (not via content provider) in order to get bona fide page IDs for bucketing/sampling.

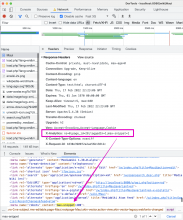

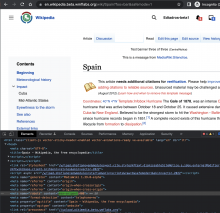

- Navigate to a page and check the response headers. For pages in the treatment group, you will see "max-snippet=1" in the X-Analytics response header and a corresponding meta robots tag with the max-snippet directive in the console:

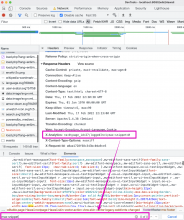

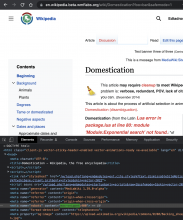

- Keep navigating to other locally created pages until you find one whose X-Analytics response header contains "max-snippet=0" and that has no max-snippet directive in any robots meta tag. This means the page is sampled and bucketed in the control group.

- To test unsampled pages (which should have max-snippet neither in the X-Analytics header nor in the console), navigate to a local page that is populated by content provider. These pages have a null pageId and therefore will not contain either max-snippet indicator.

- To make sure pages are neither sampled nor bucketed when the A/B test is disabled, comment out $wgWMEPageSchemaSplitTestSamplingRatio in local settings.
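When checking many pages, the three outcomes described above (treatment, control, unsampled) can be read off the X-Analytics value with a small helper. The sketch below is hypothetical: the function names are invented for illustration, and it assumes the header value is a semicolon-separated list of key=value pairs. The sample values in the usage note are made up.

```php
<?php
// Hypothetical QA helper; assumes "key=value;key=value" formatting.

/** Split an X-Analytics header value into an associative array. */
function parseXAnalytics( string $headerValue ): array {
    $pairs = [];
    foreach ( explode( ';', $headerValue ) as $item ) {
        $item = trim( $item );
        // Skip empty segments and anything that is not a key=value pair.
        if ( $item === '' || strpos( $item, '=' ) === false ) {
            continue;
        }
        [ $key, $value ] = explode( '=', $item, 2 );
        $pairs[trim( $key )] = trim( $value );
    }
    return $pairs;
}

/** Classify a page as treatment, control, or unsampled from its header value. */
function classifyPage( string $headerValue ): string {
    $pairs = parseXAnalytics( $headerValue );
    if ( !array_key_exists( 'max-snippet', $pairs ) ) {
        return 'unsampled';
    }
    return $pairs['max-snippet'] === '1' ? 'treatment' : 'control';
}
```

For example, `classifyPage( 'ns=0;max-snippet=1' )` yields `'treatment'`, while a value with no max-snippet key yields `'unsampled'`.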

QA steps on beta cluster

- See T301584#7812096 for sample URLs (with cache-busting query params) to check that the robots meta tag contains the max-snippet directive. The X-Analytics header is not currently visible in the response headers on the beta cluster, even though the extension is installed; this is still being investigated.

QA Results - Beta

| AC | Status | Details |
|---|---|---|
| 1 | ✅ | T301584#7864568 |