Notifications are meant to be read. Problems with a notification system tend to result in notifications being ignored, overlooked, or noticed only after a delay.

When we consider improving the visibility of notifications (T113228), the control of their volume (T100528), or the way they are presented to align with our users' priorities (T108190), we may want to measure the impact of each change: how much it helps reduce the general backlog of unread notifications compared to the earlier baseline.

To support the above, some understanding of how responsive users are to notifications will be helpful. Some aspects to measure:

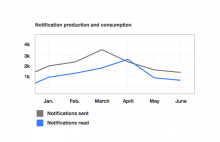

- Monthly production and consumption of notifications. Number of notifications created and number of notifications read in each 30-day period. For example, "In August, 1000 notifications were sent and 500 were read. In September, 600 notifications were sent and 700 were read", and so on.

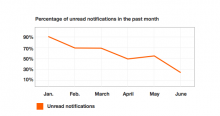

- Distribution of unread notifications. Percentage of notifications generated during a 30-day period that remain unread at the end of the following 30 days. For example, "30% of the notifications from August remained unread at the end of September".

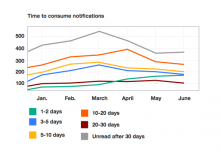

- Distribution of response time. Number of notifications generated in a 30-day period that were consumed within 1-2 days, 3-5 days, ... 20-30 days, or that remain unread after the next 30 days. For example, "500 of the 1000 notifications generated in August were consumed in less than 2 days".
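
As a rough illustration of how the three measurements above could be computed, here is a minimal Python sketch. It assumes each notification is available as a record with a creation timestamp and an optional read timestamp. The `Notification` type, the function names, and the intermediate bucket edges (2, 5, 10, 20, 30 days) are hypothetical, chosen only for this example. They do not reflect the actual notification storage schema or an agreed set of buckets.

```python
from collections import Counter
from datetime import datetime, timedelta
from typing import List, NamedTuple, Optional


class Notification(NamedTuple):
    """Hypothetical record; not the real storage schema."""
    created: datetime
    read: Optional[datetime]  # None while the notification is unread


def production_and_consumption(notifs: List[Notification],
                               start: datetime, days: int = 30):
    """Count notifications created and notifications read in the period."""
    end = start + timedelta(days=days)
    created = sum(1 for n in notifs if start <= n.created < end)
    read = sum(1 for n in notifs
               if n.read is not None and start <= n.read < end)
    return created, read


def unread_share(notifs: List[Notification],
                 start: datetime, days: int = 30) -> float:
    """Fraction of the period's notifications still unread at the end
    of the following period of the same length."""
    end = start + timedelta(days=days)
    cutoff = end + timedelta(days=days)
    cohort = [n for n in notifs if start <= n.created < end]
    if not cohort:
        return 0.0
    unread = sum(1 for n in cohort if n.read is None or n.read >= cutoff)
    return unread / len(cohort)


def response_time_distribution(notifs: List[Notification],
                               start: datetime, days: int = 30,
                               edges=(2, 5, 10, 20, 30)) -> Counter:
    """Bucket the period's notifications by time from creation to read.

    The bucket edges are an assumption made for this sketch. Anything
    unread, or read later than the last edge, lands in the final bucket.
    """
    end = start + timedelta(days=days)
    counts: Counter = Counter()
    for n in notifs:
        if not (start <= n.created < end):
            continue
        label = f"unread after {edges[-1]} days"
        if n.read is not None:
            elapsed = n.read - n.created
            for upper in edges:
                if elapsed < timedelta(days=upper):
                    label = f"under {upper} days"
                    break
        counts[label] += 1
    return counts
```

Note that the second and third functions follow a cohort (everything created in a given period), while the first one counts reads in a period regardless of when the notification was created. This is why the number read in a month can exceed the number sent, as in the September example above.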

Some possible visualisations are shown below: