Error

- mwversion: 1.41.0-wmf.17

- reqId: 9a603a79-5158-4fc8-b88b-355068caab24

- Find reqId in Logstash

MediaWiki\Extension\Notifications\Api\ApiEchoUnreadNotificationPages::getUnreadNotificationPagesFromForeign: Unexpected API response from {wiki}

data.error.*: See https://www.wikidata.org/w/api.php for API usage. Subscribe to the mediawiki-api-announce mailing list at <https://lists.wikimedia.org/postorius/lists/mediawiki-api-announce.lists.wikimedia.org/> for notice of API deprecations and breaking changes.

data.error.code: badtoken

data.error.info: The centralauthtoken is not valid.

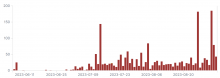

Impact

Unknown, unable to reproduce.

Notes

Happens when ApiEchoUnreadNotificationPages is invoked in the secondary DC. It stores a centralauthtoken, then makes a cross-DC request to the Echo API on another wiki, signed with that token. Reading the token from the store in the other DC then fails, which produces the badtoken error.
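
A toy model of the failure mode described above may make the sequence easier to follow. It assumes the token store is effectively DC-local at the moment the foreign request arrives, which is what the symptom suggests but is not confirmed here. All class and function names are hypothetical and none of this is MediaWiki or CentralAuth code.

```python
# Toy model only: names are hypothetical, not MediaWiki/CentralAuth code.
import secrets
from typing import Dict, Optional


class DcLocalTokenStore:
    """Stands in for a per-datacenter token store (assumed: no cross-DC visibility)."""

    def __init__(self, dc_name: str) -> None:
        self.dc_name = dc_name
        self._tokens: Dict[str, str] = {}

    def issue(self, user: str) -> str:
        # Store a fresh random token for the user in this DC only.
        token = secrets.token_hex(16)
        self._tokens[token] = user
        return token

    def lookup(self, token: str) -> Optional[str]:
        # Returns None when this DC has never seen the token.
        return self._tokens.get(token)


def handle_foreign_api_request(store: DcLocalTokenStore, token: str) -> dict:
    """Mimics the shape of the error fields seen in the log entry above."""
    user = store.lookup(token)
    if user is None:
        return {"error": {"code": "badtoken",
                          "info": "The centralauthtoken is not valid."}}
    return {"query": {"user": user}}


secondary = DcLocalTokenStore("secondary")
primary = DcLocalTokenStore("primary")

# The request starts in the secondary DC, which stores the token locally...
token = secondary.issue("ExampleUser")

# ...but the signed request is routed to the primary DC, whose store never saw it.
cross_dc_response = handle_foreign_api_request(primary, token)

# Had the request stayed in the originating DC, the lookup would succeed.
same_dc_response = handle_foreign_api_request(secondary, token)
```

In this model `cross_dc_response` carries the `badtoken` error while `same_dc_response` does not, matching the observation that the failure depends on which DC serves the foreign request.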

(Ideally the API request would be made within the current DC, but the routing logic in MWHttpRequest is naive and always sends MediaWiki requests to the primary DC. See T347781: MWHttpRequest should not route read requests to the primary DC.)
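
The difference between the current routing and the behaviour T347781 asks for can be sketched roughly as follows. This is an illustration only: the function names, the `Datacenters` type, and the choice to treat GET and HEAD as read requests are assumptions, and the real MWHttpRequest logic is not shown here.

```python
# Hypothetical sketch: not MWHttpRequest internals.
from dataclasses import dataclass

# Assumption: GET/HEAD are treated as read requests for routing purposes.
READ_METHODS = {"GET", "HEAD"}


@dataclass(frozen=True)
class Datacenters:
    local: str    # DC handling the current request
    primary: str  # DC that accepts writes


def route_always_primary(method: str, dcs: Datacenters) -> str:
    """The 'naive' behaviour described above: every request goes to the primary DC."""
    return dcs.primary


def route_reads_locally(method: str, dcs: Datacenters) -> str:
    """Alternative: keep reads in the current DC, send everything else to the primary."""
    return dcs.local if method.upper() in READ_METHODS else dcs.primary


dcs = Datacenters(local="secondary", primary="primary")
```

With `route_reads_locally`, a read-only foreign API call issued from the secondary DC would stay in that DC, so the token would be read in the DC where it was stored.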

Mostly happens for requests initiated from MW-on-k8s, although a few such errors also occur on non-k8s servers (~99% of all errors are on k8s).