This task involves the work of identifying the technical approaches we will consider using for splitting an arbitrary range of text/content into discrete sentences.

Decision(s) to be made

- 1. What – if any – technical approach is accurate and reliable enough for the Editing Team to depend on for identifying discrete sentences for the purpose of Edit Check being able to "automatically place a reference at the end of a sentence."

Investigation output

Per what @DLynch proposed in T324363#8561900, we will strive to make a prototype (or series of prototypes depending on how many approaches seem viable) that we can use in Patch demo to evaluate how effective a given approach is at splitting arbitrary content into discrete sentences.

Findings

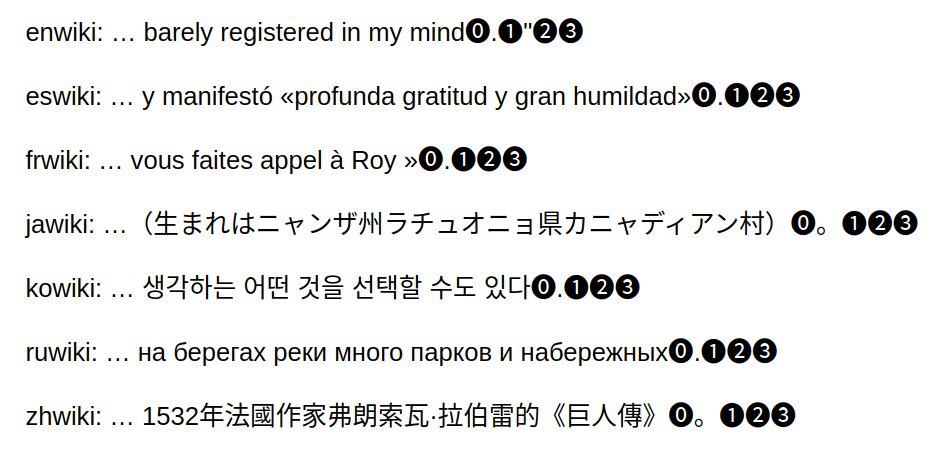

- Not currently able to support sentence splitting in Thai

- Reason being: Thai does not have punctuation to signify the end of a sentence which the current sentence splitting approach depends on/cues off of.

Open question(s)

- 1. What languages will a given sentence splitting approach need to work in for us to consider it viable?

Done

- Next steps are documented for all Decision(s) to be made

- Answers to all Open question(s) are documented

- Next steps are identified for all Findings (should there be any)