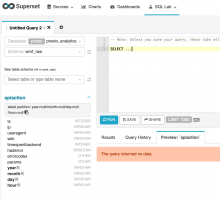

Action API traffic data (counts, user agents, errors, backend latency) are collected in the ActionApi tables in Hadoop. Currently the only way to use them is to log in to the stats box and run Hive queries by hand, which makes them impractical for product management. We should expose them in a more accessible way.

This is probably, although not necessarily, blocked on T137321: Run ETL for wmf_raw.ActionApi into wmf.action_* aggregate tables (which would make the data collection more production-like).