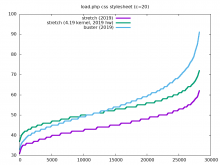

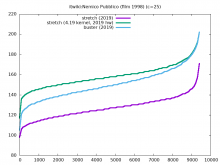

It appears that appservers, especially API servers, have their performance negatively affected by upgrading from stretch to buster.

This ticket is to investigate why that is.

Some graph screenshots are here: https://wikitech.wikimedia.org/wiki/Appserver-buster-upgrade-2021#Is_it_actually_getting_slower?

Numbers provided by joe:

Taking mw1403 (stretch) vs mw1405 (buster), both appservers. Values are in milliseconds, averaged over one day as measured by envoy:

| | p50 | p75 | p90 | p99 |
|---|---|---|---|---|
| stretch | 144 | 218 | 383 | 1879 |
| buster | 149 | 224 | 415 | 1996 |
| diff % | +3.5 | +2.7 | +8.3 | +6 |

Now the same for API servers, specifically mw1404 (buster) vs mw1402 (stretch)

p50 p75 p90 p99

stretch 73 95 207 1028

buster 81 129 239 1533

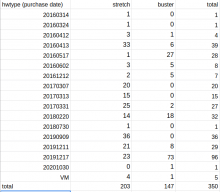

diff % +11 +36 +11 +49spreadsheet with data which server is buster and which is stretch, when they have been purchased, what the reimage progress is:

https://docs.google.com/spreadsheets/d/1Ris18-joRFfd3OHjGJIraVUk-bpmIRORsPoms9D7BcM/edit?usp=sharing
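
For anyone who wants to re-check the diff % rows in the latency tables above, here is a minimal sketch that recomputes them from the listed stretch and buster values. The names `LATENCY_MS`, `percent_diff` and `diff_row` are made up for this illustration and are not part of any existing tooling. The numbers are copied from the tables, not pulled from envoy.

```python
# Percentile latencies in milliseconds, copied by hand from the tables above.
PERCENTILES = ("p50", "p75", "p90", "p99")

LATENCY_MS = {
    # mw1403 (stretch) vs mw1405
    "appserver": {
        "stretch": (144, 218, 383, 1879),
        "buster": (149, 224, 415, 1996),
    },
    # mw1402 (stretch) vs mw1404 (buster)
    "api": {
        "stretch": (73, 95, 207, 1028),
        "buster": (81, 129, 239, 1533),
    },
}


def percent_diff(baseline, candidate):
    """Relative change of candidate against baseline, in percent."""
    return (candidate - baseline) / baseline * 100.0


def diff_row(cluster):
    """Return {percentile: percent change from stretch to buster}, rounded to 0.1."""
    data = LATENCY_MS[cluster]
    return {
        name: round(percent_diff(stretch, buster), 1)
        for name, stretch, buster in zip(PERCENTILES, data["stretch"], data["buster"])
    }


if __name__ == "__main__":
    for cluster in LATENCY_MS:
        print(cluster, diff_row(cluster))
```

Recomputed this way, the appserver row and most of the API row agree with the listed figures after rounding. The API p90 pair (207 vs 239) comes out at roughly +15.5% rather than the listed +11, so either one of those two latencies or that percentage may have been mistyped.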