- On slow mobile connections, the default thumbnail quality setting produces impractically large thumbnails. We should optimize for speedy delivery instead, by serving low-res thumbnails by default.

- We use srcset to serve high DPI images to mobile devices that have a display with a high pixel density. However, that is not the only factor that should be relevant; equally (or more) important is the user's connection speed. We should not serve high DPI images to users on a 2G connection by default, even if the device is capable of displaying them.

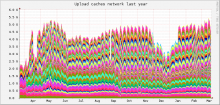

Payload size of [[en:Barack Obama]] with and without proposed modifications:

Plan

- Use historic NavigationTiming data to assign a speed rating of "slow" or "fast" to each country / region pair.

- Have Varnish set a NetSpeed=slow or NetSpeed=fast cookie based on geolocation.

- Vary the cache on the NetSpeed cookie. (Or rather: in vcl_hash, update the hash if req.http.Cookie ~ "(^|;\s*)NetSpeed=slow").

- Have MobileFrontend handle NetSpeed=slow requests differently, by omitting the srcset attribute and rewriting JPEG URLs to use the qlow variant.
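
The first and third steps of the plan can be sketched roughly as follows. This is only an illustration in Python, not the actual Varnish or analytics code: the 2000 ms threshold, the use of the median, the sample format, and the function names `rate_regions` and `cache_variant` are all assumptions made for the example. The cookie pattern is the one quoted in the plan.

```python
import re
from statistics import median

# Assumed cut-off for illustration only; the real threshold would have to be
# chosen from the historic NavigationTiming data.
SLOW_THRESHOLD_MS = 2000

# Same pattern as the vcl_hash condition quoted in the plan.
NETSPEED_SLOW = re.compile(r"(^|;\s*)NetSpeed=slow")


def rate_regions(samples, threshold_ms=SLOW_THRESHOLD_MS):
    """Map each (country, region) pair to "slow" or "fast".

    `samples` is an iterable of (country, region, load_time_ms) tuples,
    a hypothetical shape for the NavigationTiming export.
    """
    by_pair = {}
    for country, region, load_time_ms in samples:
        by_pair.setdefault((country, region), []).append(load_time_ms)
    return {
        pair: "slow" if median(times) > threshold_ms else "fast"
        for pair, times in by_pair.items()
    }


def cache_variant(cookie_header):
    """Return which cache variant a request's Cookie header would select."""
    if cookie_header and NETSPEED_SLOW.search(cookie_header):
        return "slow"
    return "fast"
```

Requests without a NetSpeed cookie fall into the "fast" variant in this sketch, so only two cache variants exist per URL.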

A/C

- In mobile web, srcset should not be present

- In apps, srcset should be present in the API response

- Change default images served to mobile web users to be low-res images

- Update the option in Special:MobileOptions from data-saving mode to 'hi-res mode' (inverting its behaviour)
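
As a rough illustration of the criteria above, the sketch below strips srcset and rewrites JPEG thumbnail URLs for mobile web unless hi-res mode is on. It is written in Python for brevity and is not how MobileFrontend (PHP) does it. The function names, the regex-based rewriting, and the assumption that a low-quality thumbnail is requested by prefixing the thumbnail file name with `qlow-` are all illustrative. Leaving app responses untouched is also an assumption, since the criteria only say that srcset must remain there.

```python
import re

# Matches a whole srcset="..." attribute, including its leading whitespace.
SRCSET_ATTR = re.compile(r'\s+srcset="[^"]*"')

# Assumes thumbnail URLs of the form .../thumb/<path>/<width>px-<name>.jpg
# and that the low-quality variant is <path>/qlow-<width>px-<name>.jpg.
JPEG_THUMB = re.compile(
    r'(/thumb/[^"\s]*/)(\d+px-[^"/\s]+\.jpe?g)(?=")', re.IGNORECASE
)


def to_low_res(html):
    """Drop srcset attributes and point JPEG thumbnails at the qlow variant."""
    html = SRCSET_ATTR.sub("", html)
    return JPEG_THUMB.sub(r"\1qlow-\2", html)


def render_for(html, *, is_app, hi_res_mode):
    """Low-res is the default for mobile web; apps and hi-res mode opt out."""
    if is_app or hi_res_mode:
        return html
    return to_low_res(html)
```

Non-JPEG thumbnails (for example PNG renderings of SVG files) are left as they are, and URLs that already carry the `qlow-` prefix are not rewritten a second time.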