I recieved the following notififcation in Wikidata:Project Chat

Um eine große Verzögerung in der Datenreplikation zu vermeiden, wurde diese Transaktion abgebrochen. Die Schreibdauer (5.179366350174) hat die Grenze von 3 Sekunden überschritten. Falls du viele Objekte auf einmal änderst, versuche stattdessen, die Änderungen auf mehrere Operationen aufzuteilen.

[XqnWOApAICoAAAV@60QAAAAV] 2020-04-29 19:32:18: Fataler Ausnahmefehler des Typs „Wikimedia\Rdbms\DBTransactionSizeError“

I recieved yesterday also a error in editing the Wikidata:Project Chat.

Description

Details

2020-04-29 19:32:18 [XqnWOApAICoAAAV@60QAAAAV] mw1321 wikidatawiki 1.35.0-wmf.30 exception ERROR: [XqnWOApAICoAAAV@60QAAAAV] /w/index.php?title=Wikidata:Project_chat&action=submit Wikimedia\Rdbms\DBTransactionSizeError from line 1647 of /srv/mediawiki/php-1.35.0-wmf.30/includes/libs/rdbms/loadbalancer/LoadBalancer.php: Transaction spent 5.179366350174 second(s) in writes, exceeding the limit of 3 {"exception_id":"XqnWOApAICoAAAV@60QAAAAV","exception_url":"/w/index.php?title=Wikidata:Project_chat&action=submit","caught_by":"mwe_handler"}

[Exception Wikimedia\Rdbms\DBTransactionSizeError] (/srv/mediawiki/php-1.35.0-wmf.30/includes/libs/rdbms/loadbalancer/LoadBalancer.php:1647) Transaction spent 5.179366350174 second(s) in writes, exceeding the limit of 3

#0 /srv/mediawiki/php-1.35.0-wmf.30/includes/libs/rdbms/loadbalancer/LoadBalancer.php(2150): Wikimedia\Rdbms\LoadBalancer->Wikimedia\Rdbms\{closure}(Wikimedia\Rdbms\DatabaseMysqli)

#1 /srv/mediawiki/php-1.35.0-wmf.30/includes/libs/rdbms/loadbalancer/LoadBalancer.php(1661): Wikimedia\Rdbms\LoadBalancer->forEachOpenMasterConnection(Closure)

#2 /srv/mediawiki/php-1.35.0-wmf.30/includes/libs/rdbms/lbfactory/LBFactory.php(244): Wikimedia\Rdbms\LoadBalancer->approveMasterChanges(array, string, integer)

#3 /srv/mediawiki/php-1.35.0-wmf.30/includes/libs/rdbms/lbfactory/LBFactoryMulti.php(222): Wikimedia\Rdbms\LBFactory->Wikimedia\Rdbms\{closure}(Wikimedia\Rdbms\LoadBalancer, string, array)

#4 /srv/mediawiki/php-1.35.0-wmf.30/includes/libs/rdbms/lbfactory/LBFactory.php(246): Wikimedia\Rdbms\LBFactoryMulti->forEachLB(Closure, array)

#5 /srv/mediawiki/php-1.35.0-wmf.30/includes/libs/rdbms/lbfactory/LBFactory.php(305): Wikimedia\Rdbms\LBFactory->forEachLBCallMethod(string, array)

#6 /srv/mediawiki/php-1.35.0-wmf.30/includes/MediaWiki.php(670): Wikimedia\Rdbms\LBFactory->commitMasterChanges(string, array)

#7 /srv/mediawiki/php-1.35.0-wmf.30/includes/MediaWiki.php(640): MediaWiki::preOutputCommit(RequestContext, Closure)

#8 /srv/mediawiki/php-1.35.0-wmf.30/includes/MediaWiki.php(989): MediaWiki->doPreOutputCommit(Closure)

#9 /srv/mediawiki/php-1.35.0-wmf.30/includes/MediaWiki.php(535): MediaWiki->main()

#10 /srv/mediawiki/php-1.35.0-wmf.30/index.php(47): MediaWiki->run()

#11 /srv/mediawiki/w/index.php(3): require(string)

#12 {main}| Status | Subtype | Assigned | Task | ||

|---|---|---|---|---|---|

| Resolved | Release | brennen | T249962 1.35.0-wmf.30 deployment blockers | ||

| Resolved | PRODUCTION ERROR | daniel | T251457 LoadBalancer: Transaction spent [n] second(s) in writes, exceeding the limit of [n] |

Event Timeline

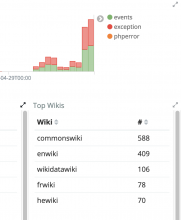

This is still happening now that 1.35.0-wmf.30 (T249962) is on all wikis, at a rate of ~100 in the last hour or so.

I mistakenly believed this was fully accounted for, but I now think it likely should have been a blocker. Seeking advice.

It is just a big transaction that takes more than the limit we have for writes, which is 3 seconds.

Nothing we can do from the DB infra.

Ok, per @Marostegui and conversation in RelEng I'm removing this as a blocker. That said it does seem clear that something changed here at least in terms of the error reporting:

This is preventing writes from happening with that particular action,so it must indeed be fixed or whatever caused that increase on time, reverted I would say.

It was a not so long text I wanted to add maybe two secentences with 20 words and 200 characters.

Definitely a new problem, and it is causing about 200 user actions per hour to be aborted/prevented (presumably edits, but may be other stuff as well).

Possibly caused by changes to EditPage this train?

- rMW4721717527f9: Replace uses and hard deprecate Article:: and WikiPage::getRevision

- rMWd4e06a3d658a: Hard deprecate WikiPage::getUndoContent

- rMWca8aa4d6b25e: Replace uses and hard deprecate Article::getRevisionFetched

- rMW257fd5237e23: EditPage::showHeader - only warn editing an old revision if it exists

Change 593578 had a related patch set uploaded (by Brennen Bearnes; owner: Brennen Bearnes):

[operations/mediawiki-config@master] Group0 only to 1.35.0-wmf.30

Change 593578 merged by jenkins-bot:

[operations/mediawiki-config@master] Group0 only to 1.35.0-wmf.30

Looking at the requestId of one of these errors, the following log messages are found: https://logstash.wikimedia.org/goto/3e387a16e01ae0f02e72b413d6691539

The interesting one there is:

Sub-optimal transaction on DB(s) [10.64.16.34 (frwiki) (TRX#7ad8fc)]:

0 3.366758 query-m: SELECT GET_LOCK('X', N) AS lockstatus [TRX#7ad8fc]

1 0.000420 query-m: SELECT RELEASE_LOCK('X') as lockstatus [TRX#7ad8fc]

2 0.000425 query-m: INSERT INTO `comment` (comment_hash,comment_text,comment_data) VALUES (N,'X',NULL) [TRX#7ad8fc]

3 0.000508 query-m: INSERT INTO `revision` (rev_page,rev_parent_id,rev_minor_edit,rev_timestamp,rev_deleted,rev_len,rev_sha1) VALUES (N,...,N,'X') [TRX#7ad8fc]

4 0.000354 query-m: INSERT INTO `revision_comment_temp` (revcomment_rev,revcomment_comment_id) VALUES (N,...,N) [TRX#7ad8fc]

5 0.000382 query-m: INSERT INTO `revision_actor_temp` (revactor_rev,revactor_actor,revactor_timestamp,revactor_page) VALUES (N,...,N,'X',revactor_page = N [TRX#7ad8fc]

6 0.005014 query-m: INSERT INTO `blobs_cluster2N` (blob_text) VALUES ('X')

7 0.000426 query-m: INSERT INTO `text` (old_id,old_text,old_flags) VALUES (NULL,'X') [TRX#7ad8fc]

8 0.000362 query-m: INSERT INTO `content` (content_size,content_sha1,content_model,content_address) VALUES (N,'X') [TRX#7ad8fc]

9 0.000423 query-m: INSERT INTO `slots` (slot_revision_id,slot_role_id,slot_content_id,slot_origin) VALUES (N,...,N) [TRX#7ad8fc]

10 0.000452 query-m: UPDATE `page` SET page_latest = N,page_touched = 'X' WHERE page_id = N [TRX#7ad8fc]The really long one seems to be SELECT GET_LOCK('X', N) AS lockstatus

Interesting. RevisionStore::insertRevisionRowOn() does uses GET_LOCK when it detects the auto-increment value of the revision table to be out of sync. This was introduced as a fix for T202032: Duplicate ar_rev_id values in several wikis.

I suppose multiple saves detecting this, and all attempting to acquire the same lock, can lead to a pile-up, causing some such requests to time out.

The pile-up could perhaps be avoided by intropducing a randomized delay before trying to acquire the lock. But that may still cause the transaction to time out.

The big question is - why does the auto-increment value get out of whack? Was there a master switch? As far as I know, the original cause of T202032 should no longer happen with recent versions of Maria...

@daniel I don't think this is that lock showing up here. Reading the code, it seems like if that lock was taken, we should've seen a bunch more queries in this transaction, like some deletes, read from the archive table etc.

Quick answer from my side: no master switch has been done in the last few months on any of our s1-s8, x1 sections

The other associated log actually gives more insight on which lock is making the transaction timeout:

Expectation (writeQueryTime <= 1) by MediaWiki::main not met (actual: 3.366758108139):

query-m: SELECT GET_LOCK('X', N) AS lockstatus [TRX#7ad8fc]

#0 /srv/mediawiki/php-1.35.0-wmf.30/includes/libs/rdbms/TransactionProfiler.php(255): Wikimedia\Rdbms\TransactionProfiler->reportExpectationViolated('writeQueryTime', Object(Wikimedia\Rdbms\GeneralizedSql), 3.366758108139)

#1 /srv/mediawiki/php-1.35.0-wmf.30/includes/libs/rdbms/database/Database.php(1396): Wikimedia\Rdbms\TransactionProfiler->recordQueryCompletion(Object(Wikimedia\Rdbms\GeneralizedSql), 1588267688.055, true, 1)

#2 /srv/mediawiki/php-1.35.0-wmf.30/includes/libs/rdbms/database/Database.php(1278): Wikimedia\Rdbms\Database->executeQueryAttempt('SELECT GET_LOCK...', 'SELECT /* Media...', true, 'MediaWiki\\Stora...', 136)

#3 /srv/mediawiki/php-1.35.0-wmf.30/includes/libs/rdbms/database/Database.php(1211): Wikimedia\Rdbms\Database->executeQuery('SELECT GET_LOCK...', 'MediaWiki\\Stora...', 136)

#4 /srv/mediawiki/php-1.35.0-wmf.30/includes/libs/rdbms/database/DatabaseMysqlBase.php(1216): Wikimedia\Rdbms\Database->query('SELECT GET_LOCK...', 'MediaWiki\\Stora...', 136)

#5 /srv/mediawiki/php-1.35.0-wmf.30/includes/Storage/PageEditStash.php(324): Wikimedia\Rdbms\DatabaseMysqlBase->lock('frwiki:stashedi...', 'MediaWiki\\Stora...', 30)

#6 /srv/mediawiki/php-1.35.0-wmf.30/includes/Storage/PageEditStash.php(222): MediaWiki\Storage\PageEditStash->getAndWaitForStashValue('frwiki:stashedi...')

#7 /srv/mediawiki/php-1.35.0-wmf.30/includes/Storage/DerivedPageDataUpdater.php(778): MediaWiki\Storage\PageEditStash->checkCache(Object(Title), Object(WikitextContent), Object(User))

#8 /srv/mediawiki/php-1.35.0-wmf.30/includes/page/WikiPage.php(2044): MediaWiki\Storage\DerivedPageDataUpdater->prepareContent(Object(User), Object(MediaWiki\Storage\RevisionSlotsUpdate), true)

#9 /srv/mediawiki/php-1.35.0-wmf.30/extensions/SpamBlacklist/includes/SpamBlacklistHooks.php(30): WikiPage->prepareContentForEdit(Object(WikitextContent))

#10 /srv/mediawiki/php-1.35.0-wmf.30/includes/HookRunner/HookContainer.php(283): SpamBlacklistHooks::filterMergedContent(Object(DerivativeContext), Object(WikitextContent), Object(Status), 'Proposition de ...', Object(User), false)Good point. So, other candidates are:

- SqlBagOStuff::lock, which is called indirectly but a lot of different caching related things, including WANObjectCache. Which in turn is used by NameTableStore, but we should only be doing reads on that during normal operation, and not locking the database... I hope.

- PageEditStash. Should only be read, not written to, while saving a new revision.

EDIT: Ah, @Pchelolo found it: PageEditStash->getAndWaitForStashValue() is indeed a read, but still uses the lock. Why it is slow here, I have no idea.

So, PageEditStash::parseAndCache() parses the page while holding the lock. PageEditStash->getAndWaitForStashValue() waits for that lock, and it apparently does so within the DB transaction that will be used to write the new revision. If parsing takes long, the process waiting for the lock may wait for long, and the transaction may time out.

Solution: PageEditStash->getAndWaitForStashValue() should be called ouside the transaction. Don't know yet how hard or easy that would be.

Thanks @Pchelolo for digging up that stacktrace. I really need to improve my Kibana-Fo

I'm not aware of any changes in that area.

How frequent is this? It's possible that it's just a rare combination of a slow parse and several edit stash updates.

@Pchelolo can you provide the rest of the stack trace? This is triggered via SpamBlacklistHooks, and I want to understand why that is being executed inside the DB transaction.

Complete stack trace:

Expectation (writeQueryTime <= 1) by MediaWiki::main not met (actual: 3.366758108139):

query-m: SELECT GET_LOCK('X', N) AS lockstatus [TRX#7ad8fc]

#0 /srv/mediawiki/php-1.35.0-wmf.30/includes/libs/rdbms/TransactionProfiler.php(255): Wikimedia\Rdbms\TransactionProfiler->reportExpectationViolated('writeQueryTime', Object(Wikimedia\Rdbms\GeneralizedSql), 3.366758108139)

#1 /srv/mediawiki/php-1.35.0-wmf.30/includes/libs/rdbms/database/Database.php(1396): Wikimedia\Rdbms\TransactionProfiler->recordQueryCompletion(Object(Wikimedia\Rdbms\GeneralizedSql), 1588267688.055, true, 1)

#2 /srv/mediawiki/php-1.35.0-wmf.30/includes/libs/rdbms/database/Database.php(1278): Wikimedia\Rdbms\Database->executeQueryAttempt('SELECT GET_LOCK...', 'SELECT /* Media...', true, 'MediaWiki\\Stora...', 136)

#3 /srv/mediawiki/php-1.35.0-wmf.30/includes/libs/rdbms/database/Database.php(1211): Wikimedia\Rdbms\Database->executeQuery('SELECT GET_LOCK...', 'MediaWiki\\Stora...', 136)

#4 /srv/mediawiki/php-1.35.0-wmf.30/includes/libs/rdbms/database/DatabaseMysqlBase.php(1216): Wikimedia\Rdbms\Database->query('SELECT GET_LOCK...', 'MediaWiki\\Stora...', 136)

#5 /srv/mediawiki/php-1.35.0-wmf.30/includes/Storage/PageEditStash.php(324): Wikimedia\Rdbms\DatabaseMysqlBase->lock('frwiki:stashedi...', 'MediaWiki\\Stora...', 30)

#6 /srv/mediawiki/php-1.35.0-wmf.30/includes/Storage/PageEditStash.php(222): MediaWiki\Storage\PageEditStash->getAndWaitForStashValue('frwiki:stashedi...')

#7 /srv/mediawiki/php-1.35.0-wmf.30/includes/Storage/DerivedPageDataUpdater.php(778): MediaWiki\Storage\PageEditStash->checkCache(Object(Title), Object(WikitextContent), Object(User))

#8 /srv/mediawiki/php-1.35.0-wmf.30/includes/page/WikiPage.php(2044): MediaWiki\Storage\DerivedPageDataUpdater->prepareContent(Object(User), Object(MediaWiki\Storage\RevisionSlotsUpdate), true)

#9 /srv/mediawiki/php-1.35.0-wmf.30/extensions/SpamBlacklist/includes/SpamBlacklistHooks.php(30): WikiPage->prepareContentForEdit(Object(WikitextContent))

#10 /srv/mediawiki/php-1.35.0-wmf.30/includes/HookRunner/HookContainer.php(283): SpamBlacklistHooks::filterMergedContent(Object(DerivativeContext), Object(WikitextContent), Object(Status), 'Proposition de ...', Object(User), false)

#11 /srv/mediawiki/php-1.35.0-wmf.30/includes/HookRunner/HookContainer.php(126): MediaWiki\HookRunner\HookContainer->callLegacyHook('EditFilterMerge...', Array, Array, Array)

#12 /srv/mediawiki/php-1.35.0-wmf.30/includes/Hooks.php(135): MediaWiki\HookRunner\HookContainer->run('EditFilterMerge...', Array, Array)

#13 /srv/mediawiki/php-1.35.0-wmf.30/includes/EditPage.php(1931): Hooks::run('EditFilterMerge...', Array)

#14 /srv/mediawiki/php-1.35.0-wmf.30/includes/EditPage.php(2388): EditPage->runPostMergeFilters(Object(WikitextContent), Object(Status), Object(User))

#15 /srv/mediawiki/php-1.35.0-wmf.30/includes/EditPage.php(1748): EditPage->internalAttemptSave(NULL, false)

#16 /srv/mediawiki/php-1.35.0-wmf.30/includes/EditPage.php(728): EditPage->attemptSave(NULL)

#17 /srv/mediawiki/php-1.35.0-wmf.30/includes/actions/EditAction.php(59): EditPage->edit()

#18 /srv/mediawiki/php-1.35.0-wmf.30/includes/actions/SubmitAction.php(38): EditAction->show()

#19 /srv/mediawiki/php-1.35.0-wmf.30/includes/MediaWiki.php(519): SubmitAction->show()

#20 /srv/mediawiki/php-1.35.0-wmf.30/includes/MediaWiki.php(305): MediaWiki->performAction(Object(Article), Object(Title))

#21 /srv/mediawiki/php-1.35.0-wmf.30/includes/MediaWiki.php(973): MediaWiki->performRequest()

#22 /srv/mediawiki/php-1.35.0-wmf.30/includes/MediaWiki.php(535): MediaWiki->main()

#23 /srv/mediawiki/php-1.35.0-wmf.30/index.php(47): MediaWiki->run()

#24 /srv/mediawiki/w/index.php(3): require('/srv/mediawiki/...')

#25 {main}I'm not entirely sure I understand it correctly, but PageEditStash::getAndWaitForStashValue uses ILoadBalancer::getAnyOpenConnection and by chance acquires a connection where a transaction was already open for edit?

I'm not entirely sure I understand it correctly, but PageEditStash::getAndWaitForStashValue uses ILoadBalancer::getAnyOpenConnection and by chance acquires a connection where a transaction was already open for edit?

Yes. the question is, why is there a transaction open when EditPage::runPostMergeFilters is called? In my mind, that should not be the case.

To prevent this type of issue, perhaps we want to assert in some places that no DB transaction is open. E.g. before parsing, and before doing GET_LOCK. That way, we'd flush out potential lock retention before it starts to actually trigger timeouts.

MediaWiki automatically wraps the entire request in a transaction if it encounters a write which does not do its own transaction handling. So something unrelated to saving could have started the transaction, like AbuseFilter taking an action, or a session refresh (although I would expect either to show up in Logstash).

That's true, but an automatic transaction would have been flushed before starting the explicit transaction for the revision creation, I think...

My plan of action for now:

- make database::lock() flush automatic transactions.

- make database::lock() flush warn about explicit transactions (and fail tests)

- make the Parser warn if an explicit transaction is in progress (and fail tests)

I hope that this will trigger the condition that causes this timeout in production, by making tests fail.

Change 593757 had a related patch set uploaded (by Daniel Kinzler; owner: Daniel Kinzler):

[mediawiki/core@master] Database: don't count lock() as a write operation

While I was chatting with @Krinkle on IRC, he found out that https://gerrit.wikimedia.org/r/c/mediawiki/core/+/582022 caused Database::lock() to be treated as a write operation now. This was not the case before, and indeed should not be the case. This means the error reported here is spurious, the transaction isn't actually spending a lot of time in writes.

Change 593765 had a related patch set uploaded (by Krinkle; owner: Daniel Kinzler):

[mediawiki/core@wmf/1.35.0-wmf.30] rdbms: don't treat lock() as a write operation

Change 593757 merged by jenkins-bot:

[mediawiki/core@master] rdbms: don't treat lock() as a write operation

https://gerrit.wikimedia.org/r/593757 has been merged, but has it been deployed (I assume it will need to be backported to the 1.35.0-wmf.30 branch as well)? Train it held until it has been.

UBN patches, and particularly train-blocking UBN patches, should not wait for SWAT, but be deployed immediately (in line with https://wikitech.wikimedia.org/wiki/Deployments/Emergencies).

Change 593765 merged by jenkins-bot:

[mediawiki/core@wmf/1.35.0-wmf.30] rdbms: don't treat lock() as a write operation

Mentioned in SAL (#wikimedia-operations) [2020-05-04T15:45:54Z] <jforrester@deploy1001> Synchronized php-1.35.0-wmf.30/includes/libs/rdbms/database/DatabaseMysqlBase.php: T251457 rdbms: don't treat lock() as a write operation (duration: 01m 04s)