- Affected components:

  - Echo extension for MediaWiki (producer, and consumer for web).

  - Mobile apps (consumer).

- Engineers for initial implementation: Michael Holloway, Mateus Santos, Bernd Sitzmann, John Giannelos

- Code steward: Product Infrastructure

- Supported push platforms: apps (v1), web (v2+)

- Target audience: registered users (v1); all users, including anonymous users (later)

Motivation

The Wikimedia product teams have a long-standing desire to leverage push notification technology to drive user engagement and retention.

Initial use cases

- Edit reminders: Push notification support would allow product teams to re-engage new editors by thanking them for their contributions across platforms and offering them encouragement to continue contributing to the projects.

- New contributor onboarding: Push notification support would allow Wikimedia product teams to engage new contributors by informing them about new features, settings, etc., across the product platforms.

- User-specific notifications about on-wiki events: Push notification support would allow us to inform users in near-real time about on-wiki actions that affect them, regardless of whether they are currently logged in. This is essentially another notification mechanism for the kinds of events handled by Echo.

Requirements

- Maintain a database of push subscriber information

- Expose a public API allowing subscribers to create, view, update, or delete their push subscriptions

- Expose a private (cluster-internal) API for requesting that a notification be pushed to one or more subscribers

- Map received notification requests to push subscriptions and forward notification requests to applicable push vendors

- Process notification requests in near-real time

Exploration

Status quo

The Echo extension to MediaWiki provides a system for defining events and associated notifications pertaining to on-wiki actions. At present, users can be notified via the Echo notifications UI on the website or by email. Both channels are limited to logged-in users.

Prior art

Echo Web Push

A long-standing WIP patch from 2017 adds Web Push support to Echo. This RFC goes beyond the scope of that patch because it will support pushing notification requests to mobile app push notification providers in addition to web push.

2017 push notifications technical plan

A technical plan document for a proposed push notifications service was created in 2017, but the project never reached implementation. This RFC is heavily informed by that plan, but the primary use cases of interest have changed.

The highest product priority behind this RFC is providing notifications about on-wiki events to support new contributors. Connecting Echo to the planned push notification service is therefore in scope for this RFC. On the other hand, subscriptions to page edits and topics, which were prioritized in the 2017 plan, are out of scope for the initial work under this RFC.

Proposal

We propose a system for receiving and managing push notification subscriptions and for transmitting notification requests to push providers.

System components

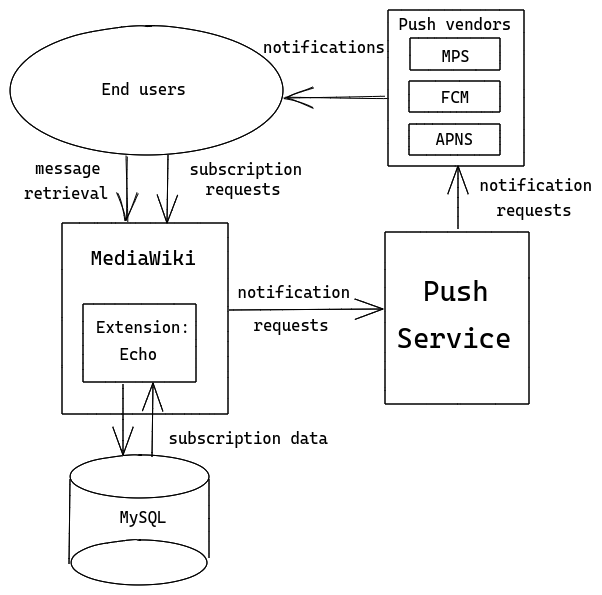

The push notifications architecture will consist of the following components:

- The primary component will be an external service for managing push subscriptions and receiving notification requests for submission to vendor push providers, including Mozilla Push Service (MPS), Apple Push Notification Service (APNS), and Google’s Firebase Cloud Messaging (FCM).

- A new push notifier type will be added to Echo to provide support for sending Echo events to the push service.

Subscription management

The system will be required to maintain subscription tokens for the appropriate push vendor for all subscribers. A public API will be created for clients to manage subscriptions. The specific endpoints available will be similar to those described in the 2017 technical plan.

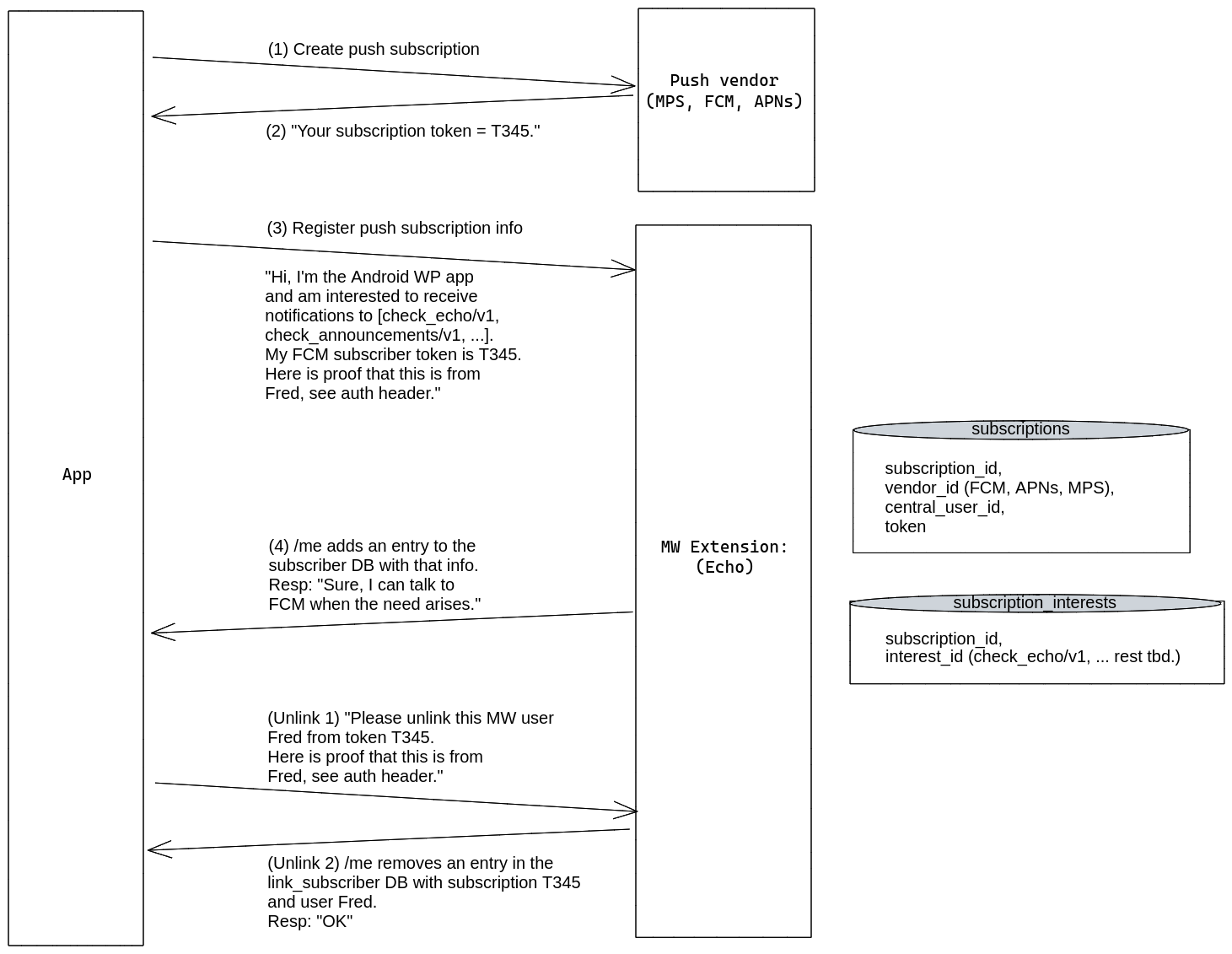

The expected subscription/unsubscription flow is as follows:

- The client obtains a push subscription token (apps) or JSON blob (web) from the platform or browser, respectively.

- The client registers the subscription token (or blob, for web) with the currently authenticated user via the Action API.

- When the association between the push subscription and the currently logged in user becomes invalid (e.g., when the user is about to log out of the website or app), it is the client's responsibility to unregister the association via the Action API.
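The register and unregister steps above can be illustrated with a minimal client-side sketch. The TypeScript below builds the Action API POST body that a client might send. The module name `pushsubscriptions` and the `command`, `provider`, and `providertoken` parameters are hypothetical placeholders, not a confirmed Echo API. Only the `token` (CSRF) parameter follows the general Action API convention for write requests.

```typescript
// Hypothetical: the module and parameter names (other than the CSRF `token`)
// are placeholders, not a confirmed Echo Action API.
type Provider = "fcm" | "apns" | "web";

interface SubscriptionRequest {
  method: "POST";
  url: string;
  body: string; // application/x-www-form-urlencoded
}

// Encode key/value pairs as an x-www-form-urlencoded body.
function formEncode(params: Record<string, string>): string {
  return Object.keys(params)
    .map((k) => `${encodeURIComponent(k)}=${encodeURIComponent(params[k])}`)
    .join("&");
}

// Build the request that registers ("create") or unregisters ("delete") a
// push subscription for the currently authenticated user.
function buildSubscriptionRequest(
  apiUrl: string,
  command: "create" | "delete",
  provider: Provider,
  providerToken: string, // platform token (apps) or serialized JSON blob (web)
  csrfToken: string,
): SubscriptionRequest {
  return {
    method: "POST",
    url: apiUrl,
    body: formEncode({
      action: "pushsubscriptions", // hypothetical module name
      command,
      provider,
      providertoken: providerToken,
      token: csrfToken, // Action API write requests carry a CSRF token
      format: "json",
    }),
  };
}
```

Following the flow above, a client would send the `create` request after obtaining a token while logged in. It would send the `delete` request just before the user logs out.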

We propose storing push subscription data in a MySQL table managed by Echo. Our priorities for subscription storage are to ensure low-latency reads when processing notification requests, and to allow for easy horizontal scaling should the service’s resource requirements increase in the future.
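The storage priorities above are low-latency reads by user and easy horizontal scaling. Both can be illustrated with an in-memory stand-in for the proposed MySQL table. This is a sketch only. The field names are hypothetical and do not reflect the draft schema, and sharding by central user ID is just one possible scaling strategy.

```typescript
// In-memory stand-in for the proposed Echo-managed MySQL table.
// Field names are hypothetical and do not reflect the draft schema.
type Provider = "fcm" | "apns" | "web";

interface PushSubscription {
  centralUserId: number; // Wikimedia central user ID
  provider: Provider;
  token: string; // platform token (apps) or serialized JSON blob (web)
  updatedAt: number; // Unix time, seconds
}

class SubscriptionStore {
  // Indexed by central user ID so the hot path (looking up a user's
  // subscriptions while processing a notification) is one keyed read.
  private byUser = new Map<number, Map<string, PushSubscription>>();

  // Insert or refresh a subscription; (provider, token) is unique per user.
  upsert(sub: PushSubscription): void {
    let subs = this.byUser.get(sub.centralUserId);
    if (!subs) {
      subs = new Map<string, PushSubscription>();
      this.byUser.set(sub.centralUserId, subs);
    }
    subs.set(`${sub.provider}:${sub.token}`, sub);
  }

  // Delete one subscription; returns false if it did not exist.
  remove(centralUserId: number, provider: Provider, token: string): boolean {
    const subs = this.byUser.get(centralUserId);
    if (!subs) return false;
    const removed = subs.delete(`${provider}:${token}`);
    if (subs.size === 0) this.byUser.delete(centralUserId);
    return removed;
  }

  forUser(centralUserId: number): PushSubscription[] {
    const subs = this.byUser.get(centralUserId);
    return subs ? Array.from(subs.values()) : [];
  }
}

// One possible horizontal-scaling strategy (an assumption): shard by central
// user ID so all of a user's subscriptions live on the same shard.
function shardFor(centralUserId: number, shardCount: number): number {
  return centralUserId % shardCount;
}
```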

A draft schema for subscription storage is here.

Notification request processing

The service will expose a private (cluster-internal) HTTP REST API for receiving notification requests for processing. The specific endpoints available for notification request submission will be similar to those described in the 2017 technical plan.

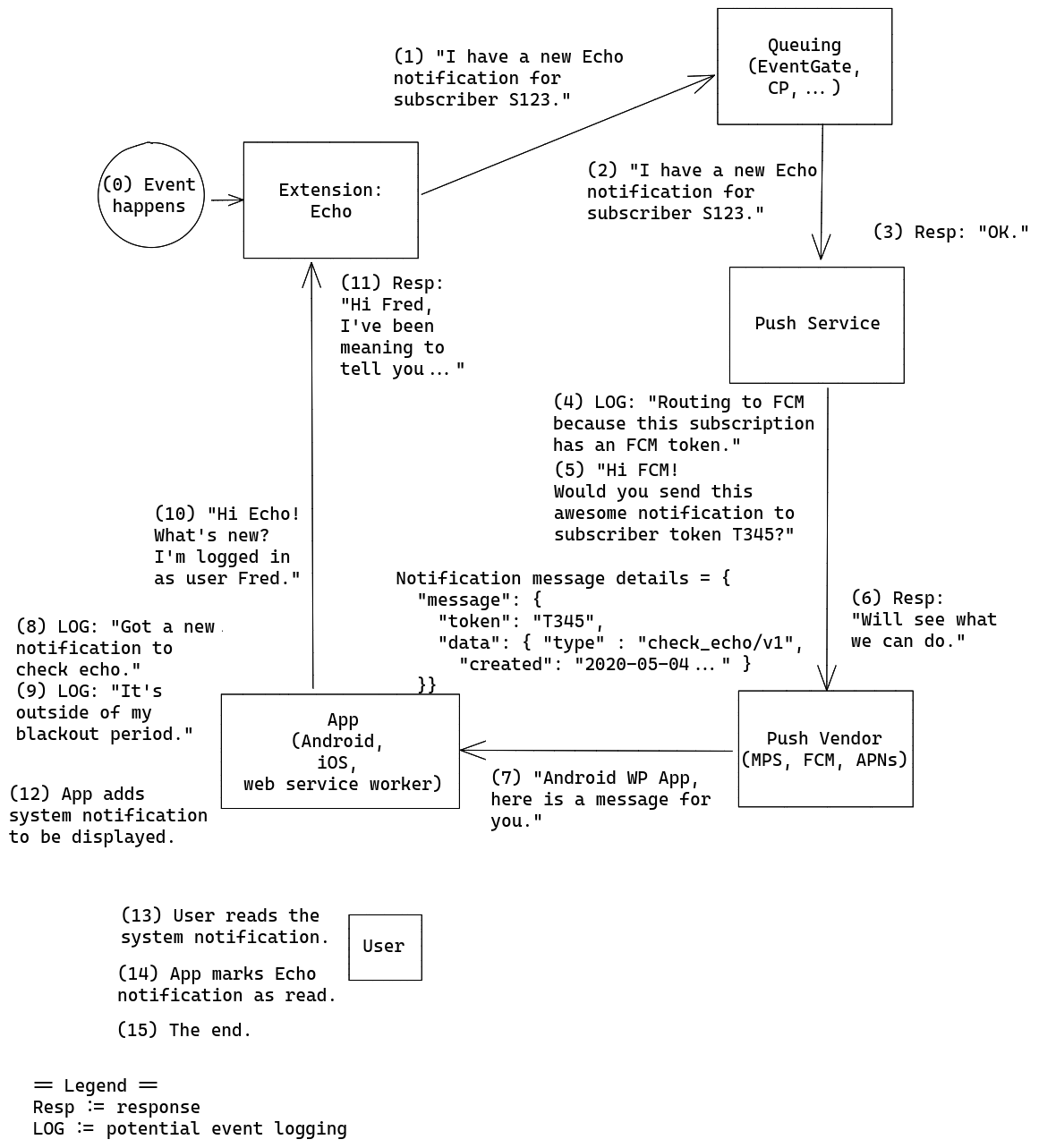

Our approach will be somewhat non-traditional, in that we will not push message content directly to clients. Rather, we will push messages that serve only to identify the type of message and where to retrieve pending messages (e.g., check_echo). This message serves to wake up the client, which then retrieves the messages directly from Wikimedia servers (e.g., from the Action API via action=query&meta=notifications). A key strength of this approach is that it maximizes user privacy by ensuring that no substantive message content passes through third-party servers. As a side benefit, it minimizes the client-side updates required for v1 in the apps, which currently poll the MediaWiki API for notifications while a user is logged in. The approach of pushing a content-free message that prompts the client to wake up and retrieve messages is inspired by Signal.
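The sketch below makes the content-free wake-up message concrete. It shows a hypothetical payload shape that carries only a type and a timestamp, and a client-side parser that discards any other fields. It also shows the retrieval URL that the client would build after waking up. The field names and the type-to-query mapping are assumptions; only `action=query&meta=notifications` comes from this RFC.

```typescript
// Hypothetical wake-up payload: it names a message type and a timestamp and
// deliberately carries no notification text.
interface WakeUpMessage {
  type: "check_echo";
  timestamp: number; // Unix time, seconds
}

// Assumed mapping from message type to the API query the client runs after
// waking up. Only action=query&meta=notifications comes from the RFC.
const RETRIEVAL_QUERY: Record<WakeUpMessage["type"], Record<string, string>> = {
  check_echo: { action: "query", meta: "notifications", format: "json" },
};

// Accept only the known fields; anything else in the payload is dropped.
function parseWakeUpMessage(data: unknown): WakeUpMessage | null {
  if (typeof data !== "object" || data === null) return null;
  const fields = data as Record<string, unknown>;
  const timestamp = fields["timestamp"];
  if (fields["type"] !== "check_echo" || typeof timestamp !== "number") return null;
  return { type: "check_echo", timestamp };
}

// Build the URL the woken-up client fetches to retrieve pending messages.
function retrievalUrl(apiUrl: string, msg: WakeUpMessage): string {
  const params = RETRIEVAL_QUERY[msg.type];
  const query = Object.keys(params)
    .map((k) => `${encodeURIComponent(k)}=${encodeURIComponent(params[k])}`)
    .join("&");
  return `${apiUrl}?${query}`;
}
```

The parser drops unknown fields, so even a misbehaving sender cannot cause notification text to be handled from the push channel. The real content always comes from the follow-up request to Wikimedia servers.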

The proposed notification request flow is as follows:

- Echo receives an event relevant to user User and emits a "push" type notification

- Asynchronously (in a deferred update or job queue job), the Echo push notifier looks up the stored subscription ID for User and makes a request to the push service with the subscription ID, a message type (e.g., echo), and a timestamp

- Upon receiving the request, the push service identifies the push platform and platform subscriber identifier for the received subscription ID, and forwards the message to the relevant push vendor (e.g., Firebase)

- The push platform delivers the message to the user device or browser

- The receiving client wakes up and retrieves the full content of all pending messages of the relevant type from Wikimedia servers (e.g., by requesting action=query&meta=notifications)

- When a notification is read, the client follows up with a request to action=echomarkread
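The push service's routing step might look roughly like the following. The service resolves each subscription ID to a platform and a subscriber identifier, then forwards the message to the matching vendor. The request fields, the `lookup` function, and the grouping into one batch per vendor are illustrative assumptions. Actual delivery to FCM, APNS, or MPS is left out because each vendor has its own API.

```typescript
// Hypothetical routing step in the push service; names are illustrative.
type Vendor = "fcm" | "apns" | "mps";

// Request body sent by the Echo push notifier (field names assumed).
interface NotificationRequest {
  subscriptionIds: string[];
  type: string; // e.g. "echo"
  timestamp: number; // Unix time, seconds
}

interface PlatformSubscriber {
  vendor: Vendor;
  platformToken: string; // vendor-specific subscriber identifier
}

interface VendorBatch {
  vendor: Vendor;
  tokens: string[];
  message: { type: string; timestamp: number }; // still content-free
}

// Resolve subscription IDs, group them by vendor, and report unknown IDs
// (for example, subscriptions deleted after Echo enqueued the request).
function routeRequest(
  req: NotificationRequest,
  lookup: (subscriptionId: string) => PlatformSubscriber | undefined,
): { batches: VendorBatch[]; unknown: string[] } {
  const byVendor = new Map<Vendor, string[]>();
  const unknown: string[] = [];
  for (const id of req.subscriptionIds) {
    const sub = lookup(id);
    if (!sub) {
      unknown.push(id);
      continue;
    }
    const tokens = byVendor.get(sub.vendor) ?? [];
    tokens.push(sub.platformToken);
    byVendor.set(sub.vendor, tokens);
  }
  const message = { type: req.type, timestamp: req.timestamp };
  const batches: VendorBatch[] = [];
  byVendor.forEach((tokens, vendor) => batches.push({ vendor, tokens, message }));
  return { batches, unknown };
}
```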

Current estimates project modest incoming notification request traffic (<1 req/s), though the rate of incoming requests will likely vary by time of day, time of year (e.g., whether school is in session), and, given the product focus on new contributors, any campaigns in effect that target new contributors.

Notification limits per event type

The product teams require configurable limits on the number of push notifications received within a specific period of time. Further, these limits should be configurable by event type. More specific requirements of this notification limit functionality will be entered in a Phabricator task after further product manager discussion.
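The concrete limit requirements are still pending product discussion, so the following is only a sketch of one way to enforce configurable per-event-type limits. It uses a sliding-window log keyed by user and event type. The limit values and event type names are hypothetical, and a production version would need shared storage rather than process memory.

```typescript
// Hypothetical limit: at most `max` pushes per `windowMs` for each
// (user, event type) pair. Real values await product requirements.
interface Limit {
  max: number;
  windowMs: number;
}

class PerTypeRateLimiter {
  private readonly limits: Record<string, Limit>;
  private readonly defaultLimit: Limit;
  // "userId:eventType" -> timestamps (ms) of pushes sent within the window.
  private readonly sent = new Map<string, number[]>();

  constructor(limits: Record<string, Limit>, defaultLimit: Limit) {
    this.limits = limits;
    this.defaultLimit = defaultLimit;
  }

  // Returns true (and records the push) if it is within the limit.
  tryAcquire(userId: number, eventType: string, nowMs: number): boolean {
    const limit = Object.prototype.hasOwnProperty.call(this.limits, eventType)
      ? this.limits[eventType]
      : this.defaultLimit;
    const key = `${userId}:${eventType}`;
    // Sliding window: forget pushes older than windowMs.
    const recent = (this.sent.get(key) ?? []).filter((t) => nowMs - t < limit.windowMs);
    const allowed = recent.length < limit.max;
    if (allowed) recent.push(nowMs);
    this.sent.set(key, recent);
    return allowed;
  }
}
```

A sliding window avoids the burst that a fixed window allows at its boundary. The cost is storing one timestamp per recent push, which is cheap at the expected traffic level.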

Metrics

We will track the Four Golden Signals: latency, traffic, errors, and saturation.

Additionally, we will track product-oriented metrics both overall and per-platform, including:

- Subscription request rate (req/s)

- Subscription deletion request rate (req/s)

- Total subscription count

Metrics must be compatible with Prometheus. Alerts will be configured for request spikes or when error rates pass a reasonable threshold.
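As an illustration of Prometheus compatibility, the sketch below renders per-platform counters and a gauge in the Prometheus text exposition format without external dependencies. The metric and label names are hypothetical. Request rates in req/s would not be stored directly. Instead, they would be derived at query time, for example with `rate(push_subscription_requests_total[5m])`. In practice, a client library such as prom-client would likely be used instead of hand-rolled rendering.

```typescript
// Hypothetical metric names; a "platform" label splits each metric per platform.
type MetricType = "counter" | "gauge";

// Escape a label value per the Prometheus text format (\, ", newline).
function escapeLabel(value: string): string {
  return value.replace(/\\/g, "\\\\").replace(/"/g, '\\"').replace(/\n/g, "\\n");
}

class PlatformMetric {
  readonly name: string;
  readonly help: string;
  readonly type: MetricType;
  private readonly values = new Map<string, number>(); // platform -> value

  constructor(name: string, help: string, type: MetricType) {
    this.name = name;
    this.help = help;
    this.type = type;
  }

  // Counters may only increase; gauges may move either way.
  inc(platform: string, by: number = 1): void {
    if (this.type === "counter" && by < 0) {
      throw new Error("counters cannot decrease");
    }
    this.values.set(platform, (this.values.get(platform) ?? 0) + by);
  }

  // Render in the Prometheus text exposition format.
  render(): string {
    const lines = [`# HELP ${this.name} ${this.help}`, `# TYPE ${this.name} ${this.type}`];
    this.values.forEach((value, platform) => {
      lines.push(`${this.name}{platform="${escapeLabel(platform)}"} ${value}`);
    });
    return lines.join("\n");
  }
}
```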

Sunset/Rollback

Push notifications are not critical to the operation of MediaWiki, and disabling them should not negatively affect any other software component running in Wikimedia production. The push notifications infrastructure will be largely self-contained to permit easy shutdown in the event of emergency or if push notifications are no longer needed by the Wikimedia products.

Why an external service?

The Wikimedia developer community has adopted a set of criteria for assessing whether a feature may be implemented as a service external to MediaWiki. According to these criteria, the proposed functionality is suitable for implementation as an external service. The functionality is self-contained and does not depend on having a consistent and current view of MediaWiki state. It does not require direct access to the MediaWiki database, and does not require features or functionality provided by MediaWiki or its extensions. Furthermore, a push notification service will likely involve resource usage spikes that make the functionality more suitable for running in a separate, dedicated environment.

Several open-source push notification server projects already exist. Building the functionality as an external service will allow us to scale independently of MediaWiki, and working from an existing open-source project will allow us to build on the lessons learned by prior push notification service implementers. That way, we can focus most of our effort on any custom functionality required by Wikimedia Product and on ensuring that the service meets the requirements of Wikimedia’s production environment.

The push service will be written in Node.js, which we have substantial experience working with and running in production. We reviewed several existing open-source push service projects and found that each would require significant updating to meet our product and operational requirements. None is suitable out of the box, and most have been unmaintained for years. We therefore plan to build a new Node.js service based on the Wikimedia node service template. Implementation details of request handling and interactions with push vendors will be informed substantially by DailyMotion’s pushd project.

Why not use Change Propagation rules rather than building a new service?

The service proposed here responds to events in MediaWiki by making HTTP requests to vendor push service providers. This is similar at a high level to what the existing Change Propagation service does. But we cannot simply use Change Propagation rules in lieu of building a dedicated service, because there are some specific requirements here that go beyond what Change Propagation is meant to support. Change Propagation rules should be stateless, but we will need to be able to do things like batch outgoing requests or conditionally enqueue requests for submission at a later time. We may also need to implement one or more strategies currently under discussion with Privacy Engineering for mitigating risks to user privacy, such as sending "decoy" messages along with real notification requests, or introducing a randomized delay period between receipt of an Echo notification and submission of a push notification request.

Note: Separately, Echo will most likely use the MediaWiki job queue, which is backed by Change Propagation in Wikimedia's environment, for enqueuing the HTTP requests for forwarding Echo notifications to the push service.
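The stateful behavior that rules out Change Propagation rules can be sketched as a queue. The queue holds each request for a randomized delay and then releases due requests together as a batch. This is a hypothetical illustration of one mitigation under discussion with Privacy Engineering, not a decided design. Decoy messages are not shown, and the delay bound is an assumption.

```typescript
// Hypothetical queued push; field names are illustrative.
interface PendingPush {
  subscriptionId: string;
  type: string;
  dueAtMs: number;
}

// Holds each request for a random delay, then releases due requests together.
// This state (the queue) is what a stateless Change Propagation rule lacks.
class DelayedBatcher {
  private queue: PendingPush[] = [];
  private readonly maxDelayMs: number;
  private readonly random: () => number;

  constructor(maxDelayMs: number, random: () => number = Math.random) {
    this.maxDelayMs = maxDelayMs;
    this.random = random; // injectable for deterministic tests
  }

  // Schedule a push after a uniformly random delay in [0, maxDelayMs).
  enqueue(subscriptionId: string, type: string, nowMs: number): void {
    const dueAtMs = nowMs + Math.floor(this.random() * this.maxDelayMs);
    this.queue.push({ subscriptionId, type, dueAtMs });
  }

  // Remove and return every push that is due, as one outgoing batch.
  flushDue(nowMs: number): PendingPush[] {
    const due = this.queue.filter((p) => p.dueAtMs <= nowMs);
    this.queue = this.queue.filter((p) => p.dueAtMs > nowMs);
    return due;
  }

  pendingCount(): number {
    return this.queue.length;
  }
}
```

A timer in the service would call `flushDue` periodically. The random delay weakens the timing correlation between an on-wiki action and the push a third-party vendor observes.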

Scope of work (Q4 2019-2020)

The Product Infrastructure team will work in Q4 to launch the baseline push notification infrastructure. This effort will include:

- Building and launching the external service to manage push subscriptions and notification requests;

- Adding a new Echo notifier type to allow for submitting events to the external push notification system; and

- Adding a table to Echo to maintain a mapping of Wikimedia central user IDs to push service subscription IDs.

Our initial focus will be on supporting push notifications in the apps. The Wikipedia apps have long been missing native push notifications, a basic feature of most mobile apps today. Supporting the apps first will also provide us with the opportunity to validate the push notification infrastructure and to work out any issues before exposing it to traffic at Wikipedia’s web scale. Support for web push will be a reach goal for initial rollout.

We anticipate that new Echo event types will be required in the future to support envisioned Product use cases for push notifications. However, this is fundamentally an infrastructure project and not a feature project, and no new Echo event types will be created as part of the initial release.

See also

Platform-specific push API docs

Web:

- https://developer.mozilla.org/en-US/docs/Web/API/Push_API

- https://developer.mozilla.org/en-US/docs/Web/API/Notifications_API

- https://developers.google.com/web/fundamentals/push-notifications

iOS:

Android: