Type of activity: Pre-scheduled session (or Unconference session if not accepted)

Main topic: How to grow our technical community / How to manage our technical debt

Etherpad: https://etherpad.wikimedia.org/p/devsummit17-code-review-processes

The problem

Whether and when code review will happen is not predictable which can be demotivating to someone who contributed a code change proposal.

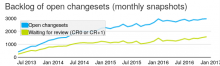

Our backlog of unreviewed/unmerged patches grows.

Concentrating on those performing reviews, potential problems might be:

- Lack of time (or CR not being prioritized by managers)

- Lack of maintainership / 'responsibility feeling'

- Lack of skill or confidence to perform {effective / good} CR

- Lack of interest in doing CR

Expected outcome

Discuss some of these items with an organizational and social focus (and mostly ignore technical aspects of CR tools) and how to improve the situation.

Exchange and document best practices, and discuss how to foster them in other development teams.

Current status of the discussion

tbd

Links

- (More specific) sequel to last time's T114419: Event on "Make code review not suck"

- Previous discussion about this very proposal

- Previous ideas and activities: https://lists.wikimedia.org/pipermail/wikitech-l/2016-March/085042.html

Quick copy & paste of Etherpad content:

Day & Time: Jan 10, 13:10

Room: Ventana

Facilitator(s): andre, kevin

Note-Taker(s): greg-g, qgil

Purpose: move towards agreement on improvements, approaches, way forward to most important aspects

Chronology:

(Andre and Kevin go through the slides.)

- Benefits of code review:

- improve quality

- share knowledge

- problem:

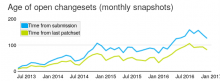

- <insert slide of open changesets per month, going up>

- time: unpredictable when a patch will be reviewed

- acceptance: unpredictable what is being reviewed/looked for

- amount: unpredictable what will be reviewed

- Aspects:

- Social, technical, organizational

- Let's get the technical aspects (Gerrit vs Differential, etc) aside; let's focus on social aspects.

- Social, technical, organizational

- Roles

- Author, Reviewer

- Past

- we've done a lot of events, had a lot of conversations, tried to improve this generally through many means

- Problems with lack of maintainers, identified maintainers, idea of a code review committee...

Problems, breaking down. (full slide)

- Resourcing

- Skill/Knowledge

- Culture

- some teams better than others

- do you look for things to review from others

- Docs/Communication

Discussion topics:

- collectively decide (define, agree on) what the most improtant problems are

- collectively decide which action(s) to take for each problem

- plan how to implement a course of action for each problem

Questions/Comments:

TimS: code review is a critical point where communicating is very important, with outside contributors or inside the organization. Be careful that you raise the minimum of quality issues, respect. Be nice to your colleagues. Reviewers tend to provide criticisms then never come back (to see the result of what the author has done to address them). Be clear about the steps missing to get the patch merged.

Aleksey: Issues about minor changes needing ot be made. Style issues also blocking the speed of review/merge.

James F: Context as a product owner. When looking at a patch for the first time it's the first time I've even known this was an issue to be addressed, so feedback can vary from small tweaks to "this isn't something we even want to do", and if contributors went through some up-front process we might avoid the latter. I find how radically different teams/repos are as being unhelpful given that I write patches against a few dozen repos. Remembering that if you don't respond to WIP changes within a month in one repo it gets abandonded but not in anothe repo

Matanya: You never know know are going to review code and when. I go to IRC and ask, but there is no official way to know who to poke and when. This doesn't scale, especially not a good experience for newcomers.

Chad: reiterate Tim's comments on being nice and nitpicking. Code review is a demotivating process when you get negative feedback. not nitpicking is super important. Code style can be automated, and should be automated. it saves everyone time and it removes the negative feeling because it is not a human who is disappointed but a machine doing a lint

Andre summarizes:

- Being friendly

- Not starting with nitpicking

- Predictable approach

- Architectural things first

- Developers should contact the "responsible team"beforehand [for bigger changes]

- Automatization of style etc.

Things we could do?

- improve documentation? there's not much for authors

- ... (missed this one)

Brian Wolff: start small before taking over the world. See what works in a small number of repos, see if it works, then expand.

Quim: My first manager at WMF used to say a patch is a gift. How you review the patch tells a lot about you. Sometimes it can represent actually money by way of time. The sheer number of hours that are in that backlog of unreviewed patches. we're throwing money away by not processing those patches.

Aleksey: re nitpicking, not even about -1, but even about just leaving a comment that something isn't correct. ignore it if it really doesn't matter. Does anyone have a code review checklist in your team? No? :(

Chase: I'm fine with nitpicking. I come from place where code review was a battle. Difference between "this won't work/this will break" and "I'd do this differently". Patches aren't always a gift. If it's about something that I don't care about I'm not going to get up 20 minutes earlier to properly review it. If I +1 a patch I am also putting my name there. Sharing responsibility with a patch. If you review my patch you are buying into this work together. Avoiding nitpicking is not a helpful narrative, but if you are asking someone +1 their code you are asking them to share responsibility.

Brian: part of the issue with nitpicking is that there can be a long time between updates on a patch back and forth so the delay over a minor thing is extending the time to merge

Tim: Sometimes we see serial nitpicking. Someone gets nitpicking, a new patch fixes, and then more nitpicking comes, from the same person or another.

Andre: more structured is wanted, less nitpicking (replacing with automated tools where appropriate). Start small with a few different projects.

Which teams want to see this problem and try to solve it?

Aleksey: checklist: we have one, I try to follow it.

Stephen: how code review works on Android. It's unusual in ways. We'er a smaller team. Don't get piles of volunteer contributions. The rest of the patches are from us paid devs. I think it is fair to say that our team we have two hats. One hat when reviewing other's code and another when we review code from a new comer. Professional developers should know conventions, and we have a social relationship. New contributor, we need to be more condescendent. Comments on a newcomer patch, they tend to be extremely enthusiastic; someone went through the process to submit it.

Different views of nitpicking: I like to get out this type of info out of reviews so I can learn "how professionals do it". i tried to make sure it is phrased in a positive way. What is a nitpicky comment? :)

Kevin: Tone is really important, nitpicky or not.

Kevin: Idea of a committee. Would people in this room participate?

Greg: What would the charter be?

Kevin: looking at these issues in turn (eg: checklists, getting agreements to review within a certain amount of time)

Quim: before discussing who would be interested, do people think this would be a solution to the problem? I think so, but maybe others not? The problem is vague. we all know our house is not so clean. What is every big team gets one person when there is a patch to review they are responsible for triaging. The success story from Android, we need more like that (stories of sucesses). Re committee: i think it will help.

Mark H: To add to that, what is the purpose of the cmmttee: enforcement or standards setting? We'd need to give them some power to slap people on the wrist if they don't follow the CoC or nitpick inappropriately. Some standardization and then the cmmtte to ensure that everyone is doing it.

Brian Woff: on metrics, if we had very good per team metrics, it would be good if they would then be posted in quarterly reviews.

Kevin: metrics can be tricky (greg: to not game them). How to make sure we don't have this coversation a year from now? Does it come from management? Hoping for a bit more of a community solution. It wouldn't just be the foundation trying to solve this problem but also the vol contribs who feel the pain. we aren't going to solve this in an hour once per year. A committee could be the way to do that.

Andre: takeaway: if we had a committee we'd have to sort out mandate, standards vs enforcement

Mark H: if volunteers how do they have any power? no budget, no ability to effect quarterly reviews

Kevin: we'd have to have Foundation participation as well. My hope would be that the committee would propose things than then would become policy, or focus peer pressure "if these teams are doing code review well, why can't other teams do the same?"

Mark H: James F, I have a good way of interacting with him. Received good feedback. From him and his team. I would suggest that people should look at his team. They have a good start. Build on that.

Seb35: I am wondering in the code review if it will be better if we can classify different trypes of code review. A specialist who could mark new patches with tags to have different types of patches and get easier reviewers for those types.

Andre: per team metrics, how teams use Gerrit? How they make sure that all the repositories that the team thinks they maintain are actually watched for new patches?

JamesF: There are 2 things we use. We create a custom dashboard in Gerrit for VisualEditor team. Gerrit allows complex queries for dashboards. [lengthy description, URLs / screenshots would be nice]. I look at that page regularly and make sure that patches is reviewed. I also subscribe to many .... and get about 1000 notifications every week, whcih might not work for others. I also have a low tech solution... [missed it].

Andre: within the foundation, is the knowledge of these custom dashboards widespread?

James: <insert description of how> yes, we should make it more widely known/used. All this doesn't help if your code happens to be in GitHub, or whatever. [There was a brief mention to Differential, the bright vague future].

Niklas L: we had a large problem of unreviewed patches (from volunteers), we tried 2 things: 1) separate tool that gets lists of open patches in our repos and says whether it needs review, who, and age. this helps to sort out which patches need review the most. Every week it is sent to the team list. 2) wrote a code review statement of intent including which repos we look at and how quickly re try to react to patches (greg: like an SLA). ... we also abandon patches after three months (?)

Andre: how do you make sure it happens (the SLA)?

Niklas: the weekly email and I hope it happens :)

Tim: what we can do to have more reviews and reduce backlog, what the committee (ArchCom?) could do. Brion and I used to review all MediaWiki codes in subversion.... How could you get someone like me to like to review. It's not about saying how this aligns to the Foundation goals. (((missed bits)))

Andre: action items?

Quim: background, the TCB/DevRel team around July we talked to Greg, Wes, the natural neighbors in Prod & Tech. we've been trying to drive it, we can organize events, raise awareness, do metrics, that's it. It was acknowledged. we can't do it anymore, it needs to be someone else. we're all still busy. Both wes and victoria and the rest of the managers are very receptive to do something. I want to propose something, whatever it is, something specific, go to management and wikitech-l, do it. SOmething that can be discussed and agreed within 3 weeks as a good enough change. Then we revist later and see if we have something better to show for ourselve

Greg: the committee idea was presented last year. It needs to be people with interest but also with... respect, the clout to make things to happen. We have this problem in the ArchCom, not approving RfCs but to get things changed at the WMF. But this is more specific. We migt have a chance to make a proposal, send it to wikitech-l, and get a committee based on the community respect, withiout having to rely on Vicotira/Wes. And start small, and keep going.

Chase: What if 2-3 months from now in one of the monthly metrics meetings or something like that, someone would present the problem, this is wht we think we should do, but it is not happening. Defining the problem is a reasonable step.

MarkH: committee that helps distribute grants, they have me have actual authority to look at grant apps and say "this is good" "this needs work", much like code review. We accept or reject. in the same way maybe there's a way to apply that model to the code review process. we want some people to come in and review this and if they review the code it's accepted. how to applymodels we already know work 9at least better than othing)

Quim: hmmm, I went blank :)

Greg: let's do the comittee to define problem, then what?

Kevin: depending on what the committee finds, maybe wes/victoria or archcommittee, or something else

Brian: probably need to convince committers on wikitech-l

Quim: ...

Action items:

- Set up a working group (which might form a committee)

- Post slide deck and link to it from these notes