Affected components: MediaWiki core, Universal Language Selector, (also many extensions indirectly like Wikibase for getting better language data)

Engineer(s) or team for initial implementation: Language Team.

Code steward: Language Team.

Motivation

There is a growing need to have language metadata readily and efficiently available in different places inside and outside MediaWiki:

- T74153: meta=siteinfo&prop=langlinks should indicate whether a language is RTL

- T187599: Implement fallback handling in Kartotherian's language selection

Definitions

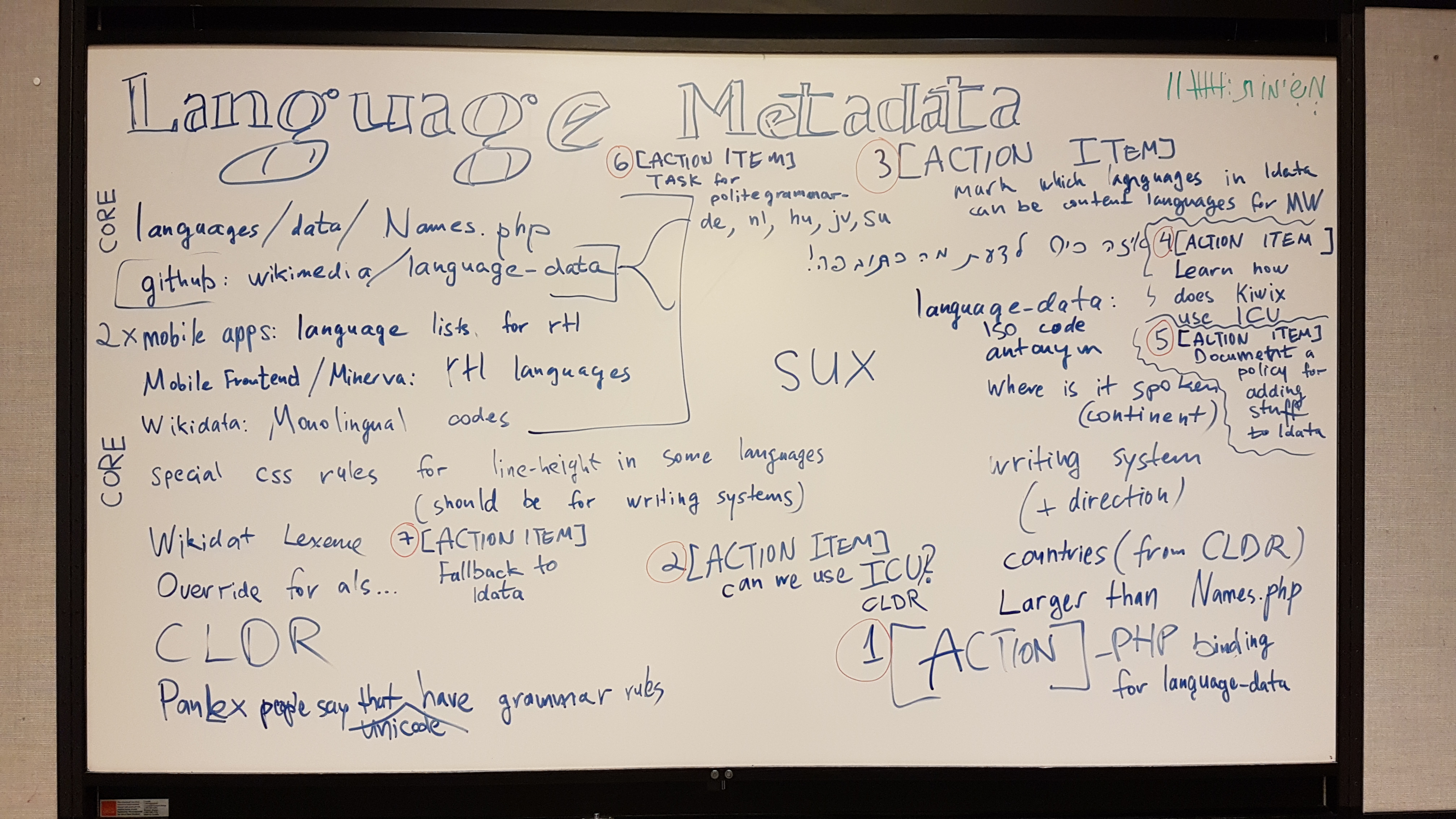

The metadata in this context means information about languages that are often required to handle languages but are not exactly about localisation. There is often need to have the language metadata efficiently available for a large set of languages. Currently the metadata consists of:

- language autonym (for language lists)

- writing direction (for language lists, for displaying text tagged in a particular language)

- writing script (for ULS language lists, possibly for fonts too)

- regions where language is spoken (for ULS language lists, automatic language selection/suggestion with GeoIP)

- fallback languages (for the fallback system to work without creating a circular dependency)

Description of issues

What we currently have in MediaWiki is only autonyms (Names.php) accessible via Language::fetchLanguageNames( Language::AS_AUTONYMS ). Writing direction and fallbacks are only available only by constructing appropriate Language objects or using Language::getLocalisationCache()->getItem(). This causes a related problem, that in order to set fallbacks or writing direction for a language, MessagesXX.php must be created, which again makes the language available for selection as an interface language in Special:Preferences. There is need to have this information available without making languages available as interface languages.

An existing database for that is https://github.com/wikimedia/language-data/ which already contains all of the above except language fallbacks.

Currently we have an overhead of updating the language metadata in multiple places: language-data itself, jquery.i18n (fallbacks), jquery.uls (copy of language-data), UniversalLanguageSelector extension (copy of jquery.uls and jquery.i18n) and MediaWiki core (Names.php, MessagesXX.php).

Exploration

To make the language metadata easy to use, and to reduce overhead of updating data in multiple different places the following actions are proposed:

1. T218639: Make language-data installable as a proper library

- Make it installable via composer and/or npm

- Make proper releases

- Consider moving it to Gerrit

2. Bring language-data to MediaWiki core

- Add the library as dependency

- Decide which format to use (YAML or JSON)

- Determine if additional caching or formats are required for performance (e.g. store as PHP code)

3. Add a mechanism for local overrides

- Similarly how we can override plurals for CLDR data

- Support two use cases: MediaWiki-customisations (e.g qqq, qqx, en-rtl) and site/farm specific customisations (outside git)

4. Replace Names.php with language-data

- Keep the existing public APIs, but replace the data

5. Add/update PHP APIs to expose data from language-data

- Similar to above, but with rest of the data

- Consider adding a new API (PHP class) to access all the metadata in a uniform way (e.g. to access a direction of a language that is not available as an interface language)

6. Add/update Action APIs to expose data from language-data

- Most Action APIs would already be updated due to them using the updated PHP APIs.

- Fix T74153: meta=siteinfo&prop=langlinks should indicate whether a language is RTL

- Consider whether it is a necessary to add new Action APIs to support frontend requirements

7. Move language fallbacks to language-data

- First copy everything to language-data, then bring in updated language-data, then update LocalisationCache to use fallbacks from there

8. Update ULS to use language-data from core if available

- ULS should be able to use language-data from core if available (to pick up local customisations) before falling back to the shipped version

- Optionally the shipped version can be dropped at later point of time (but that requires stripping it from jquery.uls that will still require it)