This is a meta-task covering the work of equipping newcomers with the information and tools they need to judge how reliable experienced volunteers are likely to consider the sources they are thinking of adding.

Background

As noted in T265163, inexperienced editors often make edits that defy the policies and guidelines of the project they are editing. One such policy we see new editors break (knowingly and unknowingly) is the requirement to cite reliable sources. [i]

This task is about equipping volunteers with the information and actions they need to decide whether, and how, to proceed with citing the source they are attempting or considering adding to Wikipedia.

Ultimately, this fits within the larger effort to help newcomers make edits that they are proud of and that experienced volunteers consider useful and aligned with Wikipedia's larger objectives.

Components

Consider these components to be an evolving list...

| Component | Description | Ticket(s) | Notes |

|---|---|---|---|

| 0. | Introduce the concept of reliable sources to people adding a reference to Wikipedia for the first time | T350322 | |

| 1. | A way for volunteers, on a per project basis, to define, in a machine-readable way, what sources they reached consensus on being reliable and unreliable. | T337431 | |

| 2. | A way for volunteers to add to and edit the "list" described in "1." | T330112 | |

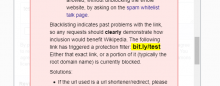

| 3. | A way for the editing interface to check a source someone is attempting to add "against" the "list" described in "1." | T349261 | |

| 4. | A way to make the person editing aware, in real-time, when they have added a source that defies the project's policies | T347531 | |

| 5. | Information that helps newcomers decide how likely experienced volunteers are to perceive the source they're adding as reliable in this specific context | T350319 | |

| 6. | A way for volunteers to audit/see the edits reference reliability feedback is shown within | T350622 | |

| 7. | A way for volunteers to report issues with the reference reliability feedback they see Edit Check providing | T343168 | |

| 8. | A way for experienced volunteers to define what message people are presented with when attempting to cite a source a project has developed a reliability-related consensus around | T337431 | |
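To make components 1, 3, 4 and 8 more concrete, here is a minimal sketch of how a machine-readable source list and a check against it could fit together. Everything in it is an assumption for illustration only: the `SourceEntry` fields, the three status values, the `check_source` and `feedback_for` helpers, and the `.example` domains are hypothetical and do not describe how Edit Check or any project's list is actually implemented. The `note` field is there because reliability is not always a binary reliable/unreliable decision.

```python
from dataclasses import dataclass
from typing import Optional, Sequence
from urllib.parse import urlparse


@dataclass(frozen=True)
class SourceEntry:
    """One consensus entry in a project's (hypothetical) source list."""
    domain: str     # domain the consensus applies to
    status: str     # assumed values: "reliable", "unreliable", "conditional"
    note: str = ""  # context or conditions the project wants to show


# Hypothetical per-project list (component 1); real lists live on-wiki.
EXAMPLE_LIST = [
    SourceEntry("tabloid.example", "unreliable", "Deprecated by consensus."),
    SourceEntry("daily.example", "reliable"),
    SourceEntry("opinion.daily.example", "conditional", "Attribute opinions."),
]


def check_source(url: str, source_list: Sequence[SourceEntry]) -> Optional[SourceEntry]:
    """Component 3: return the most specific entry matching the URL's host."""
    host = (urlparse(url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    # Match the domain itself or any subdomain of it, never a mere suffix.
    matches = [
        entry for entry in source_list
        if host == entry.domain or host.endswith("." + entry.domain)
    ]
    # Prefer the longest (most specific) matching domain.
    return max(matches, key=lambda entry: len(entry.domain), default=None)


def feedback_for(url: str, source_list: Sequence[SourceEntry]) -> Optional[str]:
    """Components 4 and 8: text to show the editor, or None if nothing to flag."""
    entry = check_source(url, source_list)
    if entry is None or entry.status == "reliable":
        return None
    return f"{entry.domain}: {entry.status}. {entry.note}".strip()
```

In this sketch, a URL with no matching entry produces no feedback at all, which reflects the assumption that the absence of consensus should not be presented to the newcomer as a judgment either way.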

Links

Research

- Research:ReferenceRisk via @FNavas-foundation

- Gender and country biases in Wikipedia citations to scholarly publications via @Pablo

- WikiCite: Editing Awareness and Trust Experiments via @cmadeo

- Research:Analyzing sources on Wikipedia via @Isaac

- Sources investigation and PoC via @FNavas-foundation

- Longitudinal Assessment of Reference Quality on Wikipedia via @Pablo

- The % of English Wikipedia sentences missing a citation has dropped by 20% in the last decade, with more than half of verifiable statements now accompanying references. The % of non-authoritative sources has remained below 1% over the years as a result of community efforts.

- Editors with more experience tend to make better changes in terms of reference quality by adding missing references and removing potentially risky sources.

- New editors who have co-edited an article with an experienced editor on the same day are more likely to avoid risky references in future edits compared to their peers who have not.

- Multilingual Wikipedia perennial source list (preprint available soon) via @Pablo

- Some sources deemed untrustworthy in one language continue to appear in articles across other languages.

- Non-authoritative sources found in the English version of a page tend to persist in other language versions of that page.

- Wikipedia Source Controversiality Metrics via @Pablo

- First findings suggest that statements backed by reliable sources tend to receive fewer edits than those backed by unreliable sources (case study: enwiki articles on climate change; reliability taken from the perennial sources list)

Relevant conversations

- MediaWiki

- @Sdkb writes in "So glad to see!": "... we'll absolutely want control over the source list there, so that we can modify it as RSP changes, and ideally we'll want to be able to provide context/specific conditions for (sometimes-)unreliable sources, as it's far from just a binary reliable/unreliable switch."

- English Wikipedia

- Easier_new_articles_suggestion_for_non-contributors

- "With this new proposed UI we could have a clear summary of the criteria for notability and eligibility on Wikipedia. The current requested articles system does not explicitly put those critera on the page, instead users need to navigate elsewhere to find them." -- [User:Elli](https://en.wikipedia.org/wiki/User:Elli)

- "... requested articles should require RS for example." -- [User:Elli](https://en.wikipedia.org/wiki/User:Elli)

- "If we do require RS, we would still need to the community to somehow vet the sources." -- User:Philipp.governale

- "We could even attach an edit filter that barred it if there weren't at least 2 URLs in it." -- User:Nosebagbear


Tools

- WikiScore: a tool created to validate edits and count scores of participants in wikicontests, via @SEgt-WMF

- CredBot via @FNavas-foundation

- User:Headbomb/unreliable via @Pablo

- The script breaks down external links (including DOIs) to various sources in different 'severities' of unreliability.

- See: User:Headbomb/unreliable#What_it_does.

- User:SuperHamster/CiteUnseen via @Pablo

- Adds categorical icons to Wikipedia citations, providing readers and editors a quick initial evaluation of citations at a glance

- User:Novem_Linguae/Scripts/CiteHighlighter via @Pablo

- Highlights 1800 sources green, yellow, or red depending on their reliability

- Credibility bot

Source lists

Listed at https://www.wikidata.org/wiki/Q59821108

- French Wikipedia

- English Wikipedia